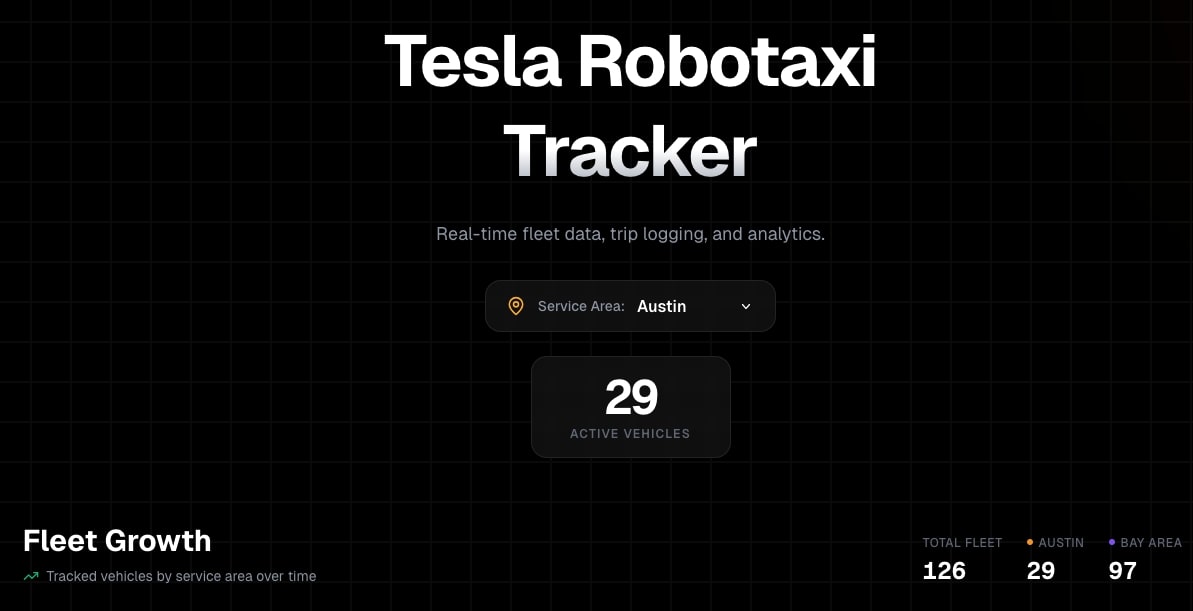

Inside Tesla’s Growing Robotaxi Fleet: What 29 Cars in Austin Reveal About the Self-Driving Future

A recent Tesla robotaxi tracker highlighted 29 identifiable robotaxi-style Teslas operating in Austin and about 97 in the San Francisco Bay Area, based on unique license plates and visual cues. While this is still far from a full commercial robotaxi rollout, it signals that Tesla is actively seeding and testing an autonomous ride-hailing network in real city environments. This article unpacks what those numbers really mean, how Tesla’s approach compares with competitors, and why Austin has become one of the most closely watched proving grounds for self-driving technology.

Mission Overview: Tesla’s Robotaxi Vision in Context

Tesla’s long-stated mission is to “accelerate the world’s transition to sustainable energy.” Robotaxis are a crucial extension of that mission: instead of each person owning a car that sits idle 90–95% of the time, a fleet of autonomous electric vehicles (EVs) could provide shared, on‑demand mobility with dramatically lower emissions and total cost per mile.

The Austin and Bay Area sightings matter because they shift the robotaxi conversation from PowerPoint slides to pavement. They show:

- Real license plates associated with vehicles that appear to be configured for high‑utilization service.

- Operation in complex, mixed-traffic environments rather than closed test tracks.

- Geographic clustering around key markets where Tesla has strong brand presence and regulatory engagement.

“Autonomous vehicles are not just a software project; they’re a systems-level transformation of transportation, cities, and energy use.”

— Dr. Missy Cummings, autonomous systems researcher and former NHTSA advisor

Why Austin and the Bay Area Are Key Testbeds

Two regions dominate current Tesla robotaxi spotting data:

- Austin, Texas (29 tracked vehicles): Home to Tesla’s Gigafactory Texas and a rapidly growing tech population, Austin combines diverse road infrastructure (suburban sprawl, dense downtown, highways) with relatively innovation-friendly local governments.

- San Francisco Bay Area (~97 tracked vehicles): One of the most closely studied self-driving testbeds in the world, and home to robotaxi services from Waymo and, while it was operating, Cruise. Tesla gains both competitive benchmarking and high‑complexity driving data.

Community trackers typically aggregate:

- Public sightings reported via social media or dedicated apps.

- License plate ranges suspected to be part of pilot fleets.

- Visual cues such as sensor placements, along with distinctive registration clusters.

This bottom‑up data does not equal an official commercial service, but it provides an early “heat map” of where Tesla is most active.
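As a rough illustration of how a tracker might turn raw sightings into per-region counts, the sketch below de-duplicates reports by license plate. The `Sighting` fields, the plate normalization rule, and the sample plates are all hypothetical; community trackers do not generally publish their pipelines, so this is an assumption about the general approach rather than any tracker's actual method.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Sighting:
    """One community report (hypothetical schema)."""
    plate: str
    region: str
    source: str  # e.g. "x", "reddit", "forum"


def normalize_plate(plate: str) -> str:
    """Uppercase and keep only letters and digits, so 'abc-1234' matches 'ABC 1234'."""
    return "".join(ch for ch in plate.upper() if ch.isalnum())


def unique_vehicles_by_region(sightings: list[Sighting]) -> dict[str, int]:
    """Count distinct normalized plates seen in each region."""
    plates: dict[str, set[str]] = defaultdict(set)
    for s in sightings:
        plates[s.region].add(normalize_plate(s.plate))
    return {region: len(seen) for region, seen in plates.items()}


# Made-up sample data, for illustration only.
SAMPLE = [
    Sighting("ABC-1234", "Austin", "x"),
    Sighting("abc 1234", "Austin", "reddit"),  # same car, reported twice
    Sighting("XYZ-9876", "Austin", "forum"),
    Sighting("8ABC123", "Bay Area", "x"),
]

counts = unique_vehicles_by_region(SAMPLE)
print(counts)
```

Counting distinct plates rather than raw reports is what keeps a frequently photographed car from inflating the total.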

Technology: How Tesla Robotaxis Perceive and Drive

Tesla’s robotaxi strategy rests on a controversial but technically coherent bet: full autonomy can be achieved without expensive lidar, using primarily cameras, onboard compute, and large‑scale neural networks trained on fleet data.

Core Technical Pillars

- Vision-Only Perception: Tesla’s modern vehicles use a suite of cameras providing 360° coverage. Neural networks perform:

  - Object detection (vehicles, pedestrians, cyclists, traffic cones).

  - Semantic segmentation (lanes, curbs, road edges, drivable space).

  - Depth and motion estimation from video, not lidar point clouds.

- End-to-End Neural Planning: Increasingly, Tesla is experimenting with end‑to‑end models that map raw video directly to driving actions (steering, acceleration, braking), supervised by human driver behavior and safety filters.

- Onboard Supercomputer: Newer Teslas include custom AI accelerators (e.g., Tesla’s FSD computer) capable of running complex networks in real time with low latency and high redundancy.

- Fleet Learning: Millions of consumer vehicles act as data collectors. Edge‑case events (near misses, unusual lane markings, heavy rain) are uploaded and used to retrain models at Tesla’s centralized compute clusters, including its Dojo AI supercomputer.
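To make the fleet-learning idea concrete, here is a minimal sketch of an on-vehicle filter that decides which drive segments are worth uploading. Tesla has not published its trigger logic; the `DriveSegment` fields, the thresholds, and the tag names below are hypothetical and chosen only to mirror the three edge-case examples mentioned above.

```python
from dataclasses import dataclass


@dataclass
class DriveSegment:
    """Summary of a short clip of driving (hypothetical fields)."""
    min_time_to_collision_s: float  # smallest estimated time-to-collision
    lane_confidence: float          # 0.0-1.0 confidence in lane detection
    rain_intensity: float           # 0.0 (dry) to 1.0 (downpour)


# Illustrative thresholds, not real calibration values.
TTC_NEAR_MISS_S = 1.5
LOW_LANE_CONFIDENCE = 0.4
HEAVY_RAIN = 0.7


def upload_reasons(seg: DriveSegment) -> list[str]:
    """Return the edge-case tags that apply; an empty list means 'skip'."""
    reasons = []
    if seg.min_time_to_collision_s < TTC_NEAR_MISS_S:
        reasons.append("near_miss")
    if seg.lane_confidence < LOW_LANE_CONFIDENCE:
        reasons.append("unusual_lane_markings")
    if seg.rain_intensity >= HEAVY_RAIN:
        reasons.append("heavy_rain")
    return reasons


routine = DriveSegment(min_time_to_collision_s=6.0, lane_confidence=0.95, rain_intensity=0.0)
tricky = DriveSegment(min_time_to_collision_s=1.1, lane_confidence=0.30, rain_intensity=0.8)

print(upload_reasons(routine))
print(upload_reasons(tricky))
```

Filtering on the vehicle keeps routine miles from consuming upload bandwidth, so only the unusual segments reach the training pipeline.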

“You don’t get to real-world autonomy by hand‑coding every rule. You get there by learning from billions of miles of human and AI driving data.”

— Elon Musk, CEO of Tesla, speaking at Tesla AI Day

Hardware and Vehicle Design for Robotaxis

While Tesla can, in theory, upgrade existing customer vehicles into part‑time robotaxis, dedicated fleet vehicles are likely to optimize for:

- Maximized passenger space and easy ingress/egress.

- Durable interiors designed for high daily utilization.

- Simplified driver controls (or fully driverless cabin) in later stages.

- High‑cycle battery packs tuned for many short trips per day.

Scientific Significance: Data, Safety, and Urban Systems

Autonomous robotaxis represent a convergence of several scientific and engineering disciplines:

- Machine learning and computer vision for perception and behavior prediction.

- Control theory for reliable vehicle dynamics and trajectory tracking.

- Human factors and cognitive science for understanding how people interact with AVs.

- Urban planning and systems modeling for assessing large‑scale impacts on congestion and emissions.

Robotaxi deployments in cities like Austin create invaluable real‑world laboratories:

- Safety Modeling: Comparing incident rates per million miles between AVs and human drivers.

- Traffic Flow Analysis: Quantifying how AVs interact with human drivers at intersections, merges, and bottlenecks.

- Environmental Impact: Estimating reductions in local pollutants and CO₂ when ICE ride‑hail trips are displaced by EV robotaxis.
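The safety-modeling comparison comes down to a rate calculation. The sketch below computes incidents per million miles for two fleets, plus a rough 95% interval using a normal approximation to a Poisson count. The incident counts and mileages are placeholder numbers, not measured data.

```python
import math


def incidents_per_million_miles(incidents: int, miles: float) -> float:
    """Incident rate normalized to one million miles."""
    if miles <= 0:
        raise ValueError("miles must be positive")
    return incidents * 1_000_000 / miles


def approx_rate_interval(incidents: int, miles: float, z: float = 1.96):
    """Rough interval for the rate, treating the count as Poisson.

    Uses the normal approximation (count +/- z * sqrt(count)), which is
    only reasonable when the count is not tiny.
    """
    if miles <= 0:
        raise ValueError("miles must be positive")
    half_width = z * math.sqrt(incidents)
    low = max(incidents - half_width, 0.0)
    high = incidents + half_width
    scale = 1_000_000 / miles
    return low * scale, high * scale


# Placeholder inputs for illustration only.
av_rate = incidents_per_million_miles(incidents=12, miles=4_000_000)
human_rate = incidents_per_million_miles(incidents=45, miles=10_000_000)
ratio = av_rate / human_rate

print(f"AV: {av_rate:.2f}, human: {human_rate:.2f}, ratio: {ratio:.2f}")
print(approx_rate_interval(12, 4_000_000))
```

The interval matters as much as the point estimate: with only a few million miles, the plausible range for a fleet's rate can be wide enough to overlap the human baseline.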

Studies such as the RAND reports on autonomous vehicle safety suggest that deploying AVs earlier, once they are even modestly safer than human drivers, may save more lives overall than waiting for near‑perfect systems.

Milestones: From FSD Beta to Emerging Robotaxi Fleets

Tesla’s path toward robotaxis has been incremental, with overlapping software, hardware, and regulatory milestones. The tracker’s 29 Austin vehicles sit on top of several years of groundwork.

Key Milestones to Date

- 2015–2019: First Autopilot and Enhanced Autopilot releases for highway driving assistance.

- 2020–2023: Expansion of “Full Self‑Driving” (FSD) Beta to a broad early‑access user base, moving from highway to city streets.

- 2023–2024: Rapid iteration of end‑to‑end neural networks, improving unprotected turns, complex merges, and roundabouts.

- 2024–2025: Increased clustering of “fleet‑style” vehicles in key cities like Austin and the Bay Area, as highlighted by community trackers.

Independent analysts like Brian Wang of NextBigFuture track these deployments as leading indicators of when Tesla might flip from “testing” to “service” in specific corridors or time windows.

Tools and Data Behind Community Robotaxi Trackers

Community-run robotaxi trackers typically integrate:

- Publicly visible license plate sequences registered in specific blocks.

- Photo and video reports tagged on platforms like X (Twitter), Reddit, and dedicated forums.

- Heuristics about fleet registration patterns and common staging locations (e.g., near service centers or factories).

These tools are not official sources, but when corroborated by multiple sightings and independent observers, they form a reasonably robust signal of underlying activity.
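One way to encode the "corroborated by multiple sightings and independent observers" idea is a simple threshold on distinct reporters per plate. The pair layout, the reporter handles, and the threshold of two are assumptions for illustration, not any tracker's documented rule.

```python
from collections import defaultdict


def corroborated_plates(reports, min_observers=2):
    """Return plates reported by at least `min_observers` distinct reporters.

    `reports` is an iterable of (plate, reporter_id) pairs.
    """
    observers = defaultdict(set)
    for plate, reporter in reports:
        observers[plate].add(reporter)
    return sorted(p for p, who in observers.items() if len(who) >= min_observers)


# Made-up reports for illustration.
REPORTS = [
    ("ABC1234", "user_a"),
    ("ABC1234", "user_b"),
    ("XYZ9876", "user_a"),
    ("XYZ9876", "user_a"),  # a repeat from the same person does not count twice
]

confirmed = corroborated_plates(REPORTS)
print(confirmed)
```

Counting distinct reporters rather than total reports is the key design choice here: one enthusiastic observer posting the same car repeatedly should not be enough to confirm it.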

Challenges: Regulation, Public Trust, and Technical Edge Cases

The presence of 29 robotaxi‑style Teslas in Austin does not mean Tesla has “solved” autonomy. Major hurdles remain across several dimensions.

1. Regulatory and Legal Frameworks

Autonomous vehicles operate under a patchwork of federal guidance and state or city‑level regulations. Key open questions include:

- Who is legally responsible in a crash involving a driverless robotaxi?

- What minimum safety performance and reporting standards should be required?

- How should cities manage AV fleet caps, curb space, and integration with public transit?

States like Texas and Arizona have been comparatively welcoming to AV testing, but national standards are still evolving.

2. Technical Edge Cases and Adverse Conditions

Even with billions of training miles, rare and complex scenarios can challenge current systems:

- Unpredictable pedestrian or cyclist behavior.

- Construction zones with missing or ambiguous signage.

- Heavy rain, fog, or glare that compromises camera visibility.

Researchers such as Lex Fridman, a research scientist at MIT who has extensively studied driver assistance systems, emphasize the importance of rigorous real‑world validation and transparent safety metrics.

3. Public Perception and Trust

Adoption isn’t just a function of technical capability. Surveys show that many people remain hesitant about fully driverless rides, especially after high‑profile incidents involving other AV companies. Clear communication, transparent reporting, and consistent, predictable behavior on the road will be essential for building confidence.

“Trust in automation grows slowly and can be destroyed quickly. The burden is on developers to earn that trust through performance and openness.”

— Prof. John Lee, human factors engineer and automation safety expert

Practical Implications: Economics, Jobs, and Everyday Mobility

If Tesla successfully scales robotaxis in Austin and beyond, the impact will extend far beyond tech headlines.

Economic Model and Cost per Mile

Robotaxis could lower the cost of urban rides by removing the driver’s labor cost and improving vehicle utilization. Analysts often compare:

- Current ride‑hail costs (e.g., Uber/Lyft) at roughly $1.50–$3.00 per mile in many U.S. cities.

- Theoretical robotaxi costs as low as $0.25–$0.70 per mile once scaled, depending on energy prices and maintenance.
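The cost comparison can be sketched as a simple per-mile model: amortized vehicle cost plus energy, maintenance, and overhead, spread over the miles that actually carry a paying rider. Every input below (vehicle price, lifetime mileage, efficiency, electricity price, and so on) is a hypothetical placeholder; the point is the structure of the calculation, not the specific result.

```python
from dataclasses import dataclass


@dataclass
class RobotaxiCostInputs:
    """Hypothetical inputs for a per-mile cost estimate."""
    vehicle_cost_usd: float
    lifetime_miles: float
    kwh_per_mile: float
    electricity_usd_per_kwh: float
    maintenance_usd_per_mile: float
    overhead_usd_per_mile: float  # insurance, cleaning, remote support
    paid_mile_fraction: float     # share of miles driven with a paying rider


def cost_per_paid_mile(c: RobotaxiCostInputs) -> float:
    """Total cost per mile driven, spread over paid miles only."""
    if c.lifetime_miles <= 0:
        raise ValueError("lifetime_miles must be positive")
    if not 0 < c.paid_mile_fraction <= 1:
        raise ValueError("paid_mile_fraction must be in (0, 1]")
    depreciation = c.vehicle_cost_usd / c.lifetime_miles
    energy = c.kwh_per_mile * c.electricity_usd_per_kwh
    per_mile = (
        depreciation
        + energy
        + c.maintenance_usd_per_mile
        + c.overhead_usd_per_mile
    )
    return per_mile / c.paid_mile_fraction


example = RobotaxiCostInputs(
    vehicle_cost_usd=40_000,
    lifetime_miles=300_000,
    kwh_per_mile=0.28,
    electricity_usd_per_kwh=0.15,
    maintenance_usd_per_mile=0.06,
    overhead_usd_per_mile=0.10,
    paid_mile_fraction=0.6,
)

estimate = cost_per_paid_mile(example)
print(f"${estimate:.2f} per paid mile")
```

The model makes the sensitivity visible: dividing by `paid_mile_fraction` means that empty repositioning miles raise the effective cost quickly, which is why utilization matters as much as energy or maintenance prices.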

Impact on Professional Drivers

A full transition to robotaxis could disrupt ride‑hail, taxi, and even some delivery jobs. Policy responses might include:

- Targeted retraining programs for affected workers.

- Incentives for AV companies to create new roles in fleet operations and maintenance.

- Phased rollouts that prioritize complementing, not instantly replacing, human drivers.

Urban and Environmental Effects

With the right policies, EV-based robotaxis could reduce:

- Tailpipe emissions in dense urban cores.

- Parking demand, freeing land for housing or green space.

- The total number of vehicles needed to provide mobility services.

Further Learning: Books and Resources on the Autonomous Future

For readers who want a deeper, book‑length exploration of self‑driving technology and its societal effects, consider:

- Autonomy: The Quest to Build the Driverless Car—And How It Will Reshape Our World by Lawrence D. Burns and Christopher Shulgan, which provides historical and technical context for today’s AV efforts.

- The Robot Car Revolution, which discusses the policy and economic dimensions of driverless mobility.

Recommended Talks, Papers, and Media

To complement the Austin robotaxi tracker insights, the following resources provide authoritative background:

- Tesla AI Day (YouTube) — Deep dive into Tesla’s AI stack, including vision, planning, and training infrastructure.

- NHTSA Automated Vehicle Guidance — U.S. regulatory perspective and safety frameworks.

- Nature paper on large-scale driving datasets and machine learning — Context for how big data enables better AV performance.

- Brian Wang on LinkedIn — Futurist and author at NextBigFuture, frequently analyzing Tesla and robotaxi trends.

Conclusion: Reading the Road Ahead from 29 Robotaxis in Austin

The tracker showing 29 Tesla robotaxis in Austin and nearly a hundred in the Bay Area is more than a curiosity; it is an early snapshot of a structural shift in mobility. These vehicles embody a decade of progress in AI, hardware, and electric powertrains—and they preview a world where on‑demand, low‑emission transportation could be cheaper and more accessible than car ownership for many people.

Major questions remain about regulation, labor impacts, and long‑term safety performance. Yet the direction of travel is clear: autonomy is moving from lab prototypes and glossy keynotes onto real city streets. For policymakers, engineers, and everyday riders in cities like Austin, now is the time to engage with how robotaxis should be deployed, governed, and integrated into the broader transportation ecosystem.

Watching the growth of trackers like the one cited by NextBigFuture offers a practical way to gauge real‑world deployment, separate hype from measurable activity, and understand how quickly the self‑driving future is arriving—one license plate at a time.

What to Watch Next as a Curious Observer

If you want to follow the evolution of Tesla’s robotaxi efforts in Austin and elsewhere, here are concrete signals to track over the next 12–24 months:

- Growth in the number and geographic diversity of identified fleet vehicles.

- Any official announcements of limited commercial pilot routes or times.

- Regulatory filings or safety reports related to driverless operation.

- Independent third‑party safety analyses comparing AV and human driver performance.

Combining grassroots tracking data with official communications and rigorous research will give you the clearest possible view of when robotaxis move from “beta” to an everyday option in cities like Austin.

References / Sources

- NextBigFuture – Tesla Robotaxi Tracker coverage: https://www.nextbigfuture.com

- Tesla AI and Autonomy: https://www.tesla.com/AI

- NHTSA Vehicle Automation: https://www.nhtsa.gov/vehicle-automation

- RAND Corporation – Autonomous Vehicle Safety: https://www.rand.org/pubs/research_reports/RR2150.html

- Wikimedia Commons images used under appropriate Creative Commons licenses: https://commons.wikimedia.org