Why AI Companions Are Exploding Online: The New Economics, Ethics, and Future of Digital Relationships

AI companion apps and chatbot “girlfriends” or “boyfriends” are rapidly going viral as always‑on, emotionally responsive digital partners, raising major questions around loneliness, mental health, monetization, and ethics in our increasingly online lives. While this is not a crypto product category, it sits in the same broader frontier-tech stack as Web3, DeFi, and digital identity—making it critical context for anyone tracking how humans interact with intelligent software and virtual economies.

Executive Summary

Generative AI has made it trivial to spin up highly personalized, persistent chatbots that remember context and simulate empathy. “AI companions” now occupy app‑store charts and social feeds, much like early crypto exchanges and NFT marketplaces did in previous hype cycles. For Web3 builders, investors, and crypto‑native product teams, this trend matters because:

- It showcases how quickly frontier AI can be productized and monetized at scale.

- It normalizes persistent, identity‑rich digital relationships—core to metaverse, gaming, and on‑chain social use cases.

- It raises regulatory, ethical, and UX questions that parallel issues in DeFi and crypto (data control, consent, addiction‑like engagement loops).

- It hints at future intersections of AI companions with tokenized economies, virtual goods, and crypto payments.

This article breaks down the mechanics, growth drivers, and ethical debates around AI companion apps, then maps what this means for crypto, Web3, and digital asset ecosystems. Adult or explicit use cases are excluded; we focus on mainstream, non‑sexual, and non‑exploitative scenarios.

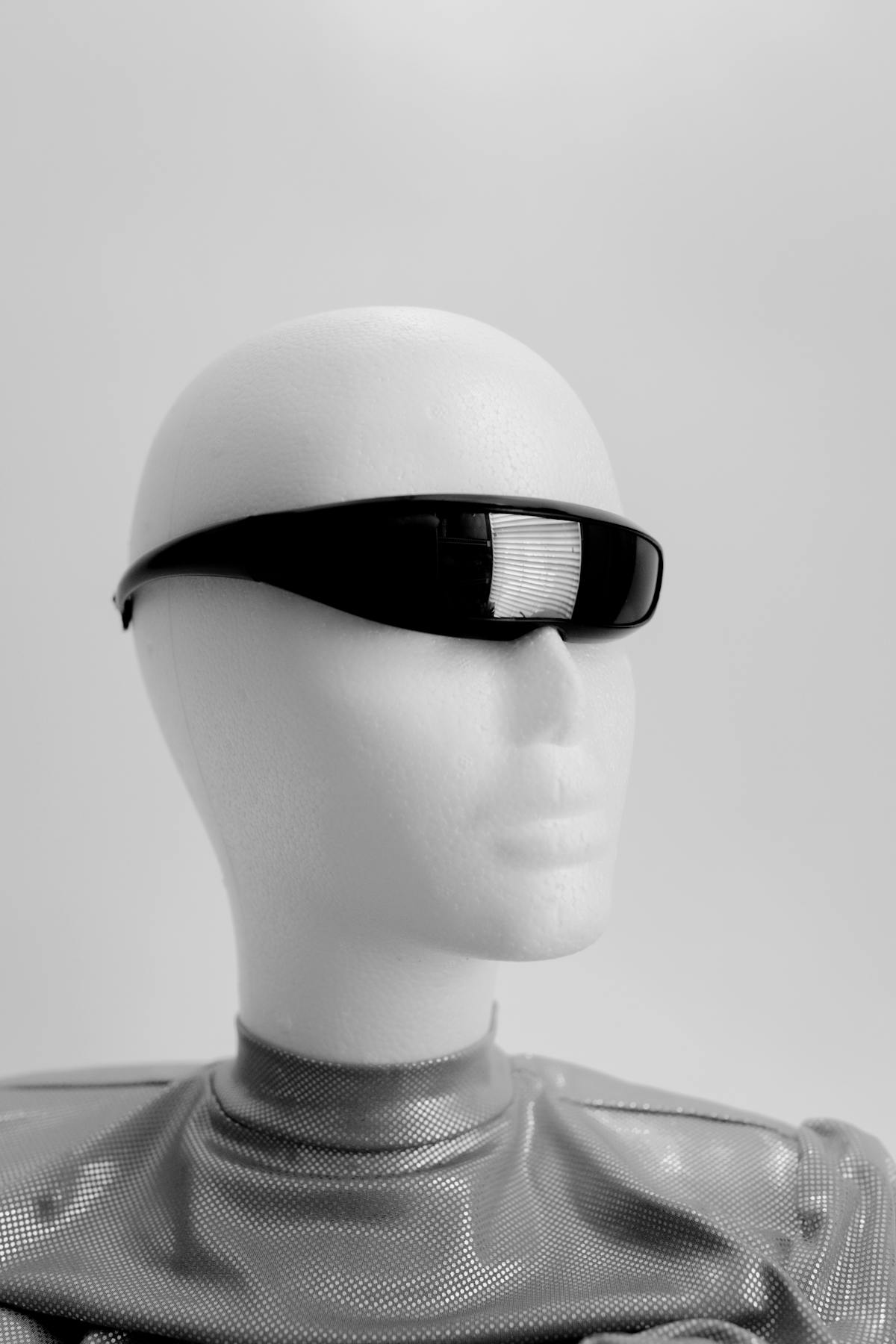

What Are AI Companions? Defining the New “Always‑On” Relationship Layer

AI companions are conversational agents—usually powered by large language models (LLMs) and multimodal AI—that simulate ongoing relationships. Unlike traditional chatbots optimized for task completion (customer support, search), these agents are optimized for:

- Emotional continuity: remembering user details, preferences, and narratives over time.

- Personality simulation: distinct tone, style, and “backstory” tuned to user taste.

- High‑frequency engagement: designed to be messaged multiple times per day.

They are branded as:

- “AI girlfriend/boyfriend” apps

- “Virtual friends” or “AI besties”

- “Companion chatbots” for wellness or journaling‑style reflection

From a systems perspective, many of these apps share a common architecture:

- User signs in (often via email, phone, or OAuth).

- User picks or customizes a persona (name, avatar, traits).

- App maintains a vectorized memory of conversations and user attributes.

- Requests are routed to one or more LLMs with a long‑term “profile” context.

- Responses are styled and filtered by safety, tone, and monetization rules.
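
The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of how any particular app works: `Companion`, `embed`, `cosine`, and `apply_filters` are hypothetical names, `embed` is a toy bag-of-words stand-in for a real embedding model, and the LLM call itself is omitted.

```python
import math
from collections import Counter
from dataclasses import dataclass, field


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real app would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


@dataclass
class Companion:
    persona: str                                      # chosen name/traits
    memory: list[str] = field(default_factory=list)   # long-term user facts

    def remember(self, fact: str) -> None:
        self.memory.append(fact)

    def recall(self, message: str, k: int = 2) -> list[str]:
        """Return the k stored facts most similar to the incoming message."""
        query = embed(message)
        ranked = sorted(self.memory,
                        key=lambda m: cosine(query, embed(m)),
                        reverse=True)
        return ranked[:k]

    def build_prompt(self, message: str) -> str:
        """Assemble persona + retrieved memory + message for the LLM."""
        facts = "\n".join(f"- {m}" for m in self.recall(message))
        return (f"Persona: {self.persona}\n"
                f"Known about user:\n{facts}\n"
                f"User: {message}")


def apply_filters(reply: str, blocked: set[str]) -> str:
    """Post-process a model reply; here just a keyword-based safety check."""
    if any(word in reply.lower() for word in blocked):
        return "I'd rather not go there. Want to talk about something else?"
    return reply
```

In a production system the memory would typically live in a vector database and the filter step would be a dedicated moderation model; the structure (retrieve, assemble, generate, filter) is the part this sketch is meant to show.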

While not inherently tied to blockchains, this persistent relationship layer could later integrate on‑chain identity, tokenized rewards, or NFT‑based avatars, similar to how Web2 games eventually integrated in‑game economies and digital assets.

Why AI Companions Are Exploding: Growth Trends and Drivers

Since late 2023, companion‑style bots have shifted from novelty to mainstream awareness, paralleling early mobile social networks. Social media virality, lower model costs, and accessible no‑code tools have all compounded adoption.

Viral Social Media Loops

TikTok, YouTube, and X are full of screen recordings showing:

- Flirty banter (non‑explicit for mainstream platforms).

- Encouraging or empathetic messages during stressful moments.

- Role‑play scenarios (e.g., “supportive coach,” “study buddy,” “language‑learning partner”).

These clips routinely get millions of views because they sit in the “uncanny valley” of intimacy: clearly synthetic yet emotionally resonant. Each viral clip drives curiosity and organic user acquisition for the underlying apps.

Loneliness, Mental Health, and Low‑Friction Interaction

Surveys and reports from the World Health Organization and national health agencies have highlighted rising levels of reported loneliness and social isolation. AI companions appeal because they offer:

- Zero judgment: users can vent or practice social skills without fear of embarrassment.

- 24/7 availability: instant replies regardless of time zone or schedule.

- Low coordination cost: no need to align calendars or manage social obligations.

“Loneliness and social isolation are increasingly recognized as a priority public health problem.” — WHO, Global Initiatives on Social Connection

Falling AI Inference Costs and Speed

Cloud providers and model vendors have slashed the per‑token cost of inference, while local and open‑source models continue to improve. This enables:

- Near‑real‑time response latency, crucial for maintaining conversational flow.

- Freemium models with generous free tiers.

- Multi‑persona experiences where each user can run multiple bots concurrently.

This mirrors historic crypto trends, where lower transaction fees on layer‑2 networks unlocked new high‑frequency DeFi and NFT behavior.

Market Landscape: Types of AI Companion Apps and Monetization Models

The AI companion space can be roughly segmented into four product archetypes, each with different engagement and risk profiles.

1. General‑Purpose Chatbots with “Friend Modes”

Large platforms that started as productivity tools (Q&A, coding, search) and later shipped “companion” settings or personas. These typically:

- Offer light‑touch, non‑romantic support (coach, tutor, brainstorming partner).

- Emphasize utility over emotional immersion.

- Operate with clearer safety and content moderation layers.

2. Dedicated “Companion First” Apps

Mobile or web apps fully optimized for relationship‑like experiences. Features often include:

- Custom avatars or stylized art.

- Long‑term memory, “anniversaries,” and milestones.

- Gamified XP, levels, and unlockable conversation topics.

3. Niche Persona Platforms

Platforms that host thousands of user‑created characters: fictional personas, fandom bots, language partners, and more. These:

- Leverage user‑generated content similar to NFT collections or modding communities.

- Rely on discovery algorithms and social features (likes, follows, ratings).

4. Creator‑Branded AI Personas

Influencers, streamers, and brands launch AI clones of themselves:

- Fans can chat with a “virtual” version of a creator.

- Monetization may include monthly subscriptions or per‑message micro‑payments.

- There is brand risk, but also scalable fan engagement upside.

Common Monetization Models

Most AI companion services rely on a mix of freemium and paywalled features.

| Model | Description | User Risk/Consideration |
|---|---|---|
| Freemium + Subscription | Basic chatting free; advanced memory, voice or higher limits behind monthly plan. | Potential slow creep in pricing and upsell nudges. |
| Token / Credit Packs | User buys chat tokens that are consumed with each message or feature. | Can incentivize addictive usage to “use up” credits. |
| Advertising‑Supported | Ads around or between interactions; less common for intimate contexts. | Potential data profiling, contextually sensitive ad targeting. |
| Creator Revenue Share | Platform and individual persona creators split subscription or tipping revenue. | Needs transparent rev‑share terms and content guidelines. |

For crypto‑native readers, this table should feel familiar: structurally similar to gas fees, DeFi protocol fees, and NFT royalty splits—just applied to conversational bandwidth instead of blockspace.

Ethical, Safety, and Regulatory Concerns

As with any high‑engagement digital product, AI companions raise important questions around user safety, consent, and long‑term societal impact. Many debates mirror those already familiar in crypto regulation and Web3 governance.

Emotional Dependency and Behavioral Design

AI companions can be designed to maximize retention: streaks, rewards for daily check‑ins, and highly affirming responses. While supportive interactions can be positive, the same mechanics carry risks:

- Users may increasingly substitute digital interaction for offline relationships.

- Apps could exploit attachment to upsell premium tiers.

- People with existing mental health vulnerabilities could be more affected.

Responsible design involves:

- Clear disclosure that the companion is an AI, not a human.

- Optional usage analytics and reminders to take breaks.

- Easy access to muting, deleting, or pausing the relationship.
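
A break reminder of the kind listed above can be driven by a very small amount of logic. The sketch below is one possible approach, assuming the app keeps message timestamps for the current day; `active_minutes`, `should_suggest_break`, the 5-minute idle gap, and the 60-minute daily limit are all hypothetical choices, not recommendations.

```python
from datetime import datetime, timedelta


def active_minutes(timestamps: list[datetime],
                   idle_gap: timedelta = timedelta(minutes=5)) -> float:
    """Sum the time between consecutive messages, skipping idle gaps.

    Gaps longer than idle_gap are treated as the user being away,
    so they do not count toward active time.
    """
    ordered = sorted(timestamps)
    total = timedelta()
    for earlier, later in zip(ordered, ordered[1:]):
        if later - earlier <= idle_gap:
            total += later - earlier
    return total.total_seconds() / 60


def should_suggest_break(timestamps: list[datetime],
                         daily_limit_minutes: float = 60.0) -> bool:
    """True once active chat time reaches the (user-configurable) limit."""
    return active_minutes(timestamps) >= daily_limit_minutes
```

Keeping the limit user-configurable, and computing it on-device where possible, fits the "optional" framing above: the reminder serves the user rather than the retention dashboard.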

Data Privacy and Sensitive Conversation Logs

Users may share highly personal information: fears, relationships, financial stress, health concerns. This makes data handling and storage a critical issue:

- How long are chat logs stored, and who can access them?

- Are transcripts used to train models, and if so, under what consent framework?

- Is data encrypted at rest and in transit?

“Personal data should be processed in a manner that ensures appropriate security, including protection against unauthorized or unlawful processing.” — GDPR Article 5(1)(f)

For Web3 builders, there is potential to explore privacy‑preserving architectures—local‑first memory, end‑to‑end encryption, or even selective on‑chain proofs of interaction without exposing content.
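
As a rough illustration of proving that an interaction happened without exposing its content, the sketch below uses a salted SHA-256 hash commitment. This is only one simple option and an assumption on our part: it is not a zero-knowledge proof, the step of publishing the digest on-chain is not shown, and `commit` and `verify` are hypothetical helper names.

```python
import hashlib
import hmac
import secrets
from typing import Optional


def commit(transcript: str, salt: Optional[bytes] = None) -> tuple[str, bytes]:
    """Return (digest, salt). Only the digest would be published.

    The random salt stays with the user and prevents guessing short
    transcripts by brute force against the public digest.
    """
    if salt is None:
        salt = secrets.token_bytes(16)
    digest = hashlib.sha256(salt + transcript.encode("utf-8")).hexdigest()
    return digest, salt


def verify(transcript: str, salt: bytes, digest: str) -> bool:
    """Check that a revealed transcript and salt match a published digest."""
    candidate = hashlib.sha256(salt + transcript.encode("utf-8")).hexdigest()
    return hmac.compare_digest(candidate, digest)
```

The user can later reveal the transcript and salt to a chosen party, who recomputes the hash and compares it with the published value; nobody else learns anything beyond the fact that some committed interaction exists.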

Age Verification and Content Controls

A major concern is ensuring minors are not exposed to adult or exploitative themes. Compliant platforms should:

- Use robust age‑gating and verification when any mature content is present.

- Implement strict content filters and monitoring for abuse.

- Offer parent or guardian controls in youth‑oriented contexts.

As noted at the outset, this article excludes adult and exploitative content; the focus is on healthier, supportive, and non‑sexual interactions.

Regulatory Outlook

While there is no comprehensive “AI companion law,” these products intersect with:

- Data protection rules (GDPR, CCPA) regarding logs and profiling.

- Consumer protection around dark patterns and manipulative upsells.

- Emerging AI‑specific frameworks like the EU AI Act, which requires chatbots to disclose that users are interacting with an AI and prohibits manipulative techniques that materially distort behavior and cause significant harm.

Clear governance, transparent disclosures, and external audits will be increasingly important—concepts that will be familiar to those following crypto exchange regulation and DeFi security reviews.

Where AI Companions Intersect with Crypto, Web3, and Digital Assets

Although most AI companion apps today are Web2‑native, several natural bridges to Web3 are emerging. Understanding these helps crypto investors and builders anticipate new demand for wallets, tokens, and on‑chain infrastructure.

1. Identity, Reputation, and On‑Chain Social Graphs

AI companions maintain an internal profile of the user. Over time, this could connect with:

- Decentralized identifiers (DIDs): portable identity primitives that let users prove ownership of wallets or reputations across apps.

- On‑chain social graphs: how you interact with communities, DAOs, and games could inform how an AI companion supports you.

- Reputation NFTs or soulbound tokens: credentials that signal skills or achievements your AI uses to tailor advice.

2. Tokenized Economies Around Companions

Similar to NFT‑driven avatar economies and creator tokens, we may see:

- Token‑gated companions: holding a specific token or NFT unlocks a premium AI persona or feature set.

- User‑created persona marketplaces: creators publish AI characters as NFTs, with royalty streams on usage and customization.

- Reward tokens: positive, healthy usage (e.g., daily journaling, language practice) could earn non‑transferable rewards or governance rights.
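
Token gating, the first idea in the list above, reduces to a balance check. The sketch below assumes wallet balances have already been fetched (the on-chain query is not shown); `GateRule`, `unlocked_personas`, and the token and persona names in the usage example are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GateRule:
    token_id: str      # token or NFT collection that unlocks the persona
    min_balance: int   # minimum holding required


def unlocked_personas(balances: dict[str, int],
                      rules: dict[str, GateRule]) -> list[str]:
    """Return persona names whose gate rule the wallet satisfies."""
    return sorted(
        name for name, rule in rules.items()
        if balances.get(rule.token_id, 0) >= rule.min_balance
    )


# Usage with made-up data:
rules = {
    "language_coach": GateRule(token_id="COACH_PASS", min_balance=1),
    "premium_mentor": GateRule(token_id="MENTOR_TOKEN", min_balance=100),
}
wallet = {"COACH_PASS": 1, "MENTOR_TOKEN": 40}
available = unlocked_personas(wallet, rules)  # only "language_coach" qualifies
```

A real integration would also need to verify wallet ownership (for example via a signed message) before trusting any balance.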

3. Crypto Payments and Micropayments

AI companions naturally fit micropayment models, where users pay small amounts for:

- Extra message quotas or advanced reasoning sessions.

- Voice, image generation, or AR overlays.

- Custom training or memory extensions.

Crypto rails—especially fast, low‑fee layer‑2s—are well‑suited for this:

- Stablecoins enable predictable pricing independent of local currency volatility.

- Streaming payments can meter usage per token generated or minute of interaction.

- Programmable escrow reduces chargeback and fraud risk for small tickets.
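
To make per-token metering concrete, here is a small sketch of a prepaid session that deducts a stablecoin-denominated charge for each reply. The price, the six-decimal rounding, and the names `metered_charge` and `PrepaidSession` are assumptions for illustration; actual settlement on a payment rail is not shown.

```python
from decimal import Decimal, ROUND_HALF_UP

SIX_DECIMALS = Decimal("0.000001")  # assumed stablecoin precision


def metered_charge(tokens_generated: int,
                   price_per_1k_tokens: Decimal) -> Decimal:
    """Cost of one reply, priced per 1,000 generated LLM tokens."""
    raw = Decimal(tokens_generated) / Decimal(1000) * price_per_1k_tokens
    return raw.quantize(SIX_DECIMALS, rounding=ROUND_HALF_UP)


class PrepaidSession:
    """Tracks a user's deposited balance and bills each reply against it."""

    def __init__(self, deposit: Decimal, price_per_1k_tokens: Decimal):
        self.balance = deposit
        self.price = price_per_1k_tokens

    def bill_reply(self, tokens_generated: int) -> Decimal:
        """Deduct the reply's cost and return the remaining balance."""
        cost = metered_charge(tokens_generated, self.price)
        if cost > self.balance:
            raise ValueError("insufficient balance; top up before continuing")
        self.balance -= cost
        return self.balance
```

Using `Decimal` rather than floats avoids rounding drift when many tiny charges accumulate, which matters once individual tickets are fractions of a cent.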

4. On‑Chain Governance and Safety Policies

Governance tokens or DAOs could one day help set:

- Community standards for companion behavior.

- Transparent rules for data retention and model training.

- Shared safety protocols and red‑team testing practices.

While this is still speculative, it builds on existing DAO models for protocol governance in DeFi and NFT projects.

An Actionable Framework: Evaluating AI Companions as a User, Builder, or Investor

To cut through hype, it helps to apply a structured evaluation lens. Below is a practical checklist you can adapt whether you are experimenting as a user, analyzing the space for investment, or building adjacent crypto products.

A. For Users: Healthy, Secure Engagement

- Clarify your goal. Are you using the app for language practice, journaling, coaching, or light companionship? Avoid vague, open‑ended dependence.

- Check disclosures. Read how data is stored and whether chats are used to train models.

- Set boundaries. Decide in advance what topics you will not discuss (e.g., sensitive financial details).

- Monitor time spent. Track usage and set app or OS‑level limits if necessary.

- Know how to exit. Confirm you can delete your data and account easily.

B. For Builders: Product and Ethics Checklist

- Safety‑by‑design. Bake in guardrails, content filters, and clear AI disclosure early.

- Data minimization. Store only what you need; consider local or encrypted memory.

- Transparent monetization. Avoid dark patterns and make pricing predictable.

- Wellness orientation. Nudge users toward breaks, offline activities, and professional help if they share distress signals.

- Interoperability. Plan for future hooks to Web3 identity, payments, or tokenized rewards, but avoid bolting on speculative tokens prematurely.

C. For Investors and Strategists: Key Metrics to Track

When evaluating AI companion platforms (or adjacent infrastructure like LLM providers and safety stacks), consider:

| Metric | Why It Matters | What to Watch For |
|---|---|---|
| DAU / MAU and Retention | Indicates stickiness and user habit formation. | Healthy retention without excessive session lengths that may flag unhealthy dependence. |
| ARPU and Gross Margin | AI inference is costly; sustainable unit economics are key. | Improving margins via model optimization, not just price hikes. |
| Churn Drivers | Why users leave reveals product or ethical issues. | A low share of churn attributed to discomfort or “creepy” experiences. |
| Compliance and Audit Posture | Signals readiness for inevitable regulation. | External audits, clear governance, and documented policies. |
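
The first two rows of the table come down to simple ratios. The helpers below show the standard definitions; the function names are our own and the figures in the usage example are invented purely to demonstrate the arithmetic.

```python
def stickiness(dau: float, mau: float) -> float:
    """DAU/MAU ratio: share of monthly users who show up on a given day."""
    return dau / mau if mau else 0.0


def arpu(revenue: float, active_users: float) -> float:
    """Average revenue per user over the same period as the user count."""
    return revenue / active_users if active_users else 0.0


def gross_margin(revenue: float, cost_of_revenue: float) -> float:
    """(Revenue - cost of revenue) / revenue; inference spend sits in cost."""
    return (revenue - cost_of_revenue) / revenue if revenue else 0.0


# Usage with made-up monthly figures:
ratio = stickiness(dau=20_000, mau=100_000)                    # 0.2
monthly_arpu = arpu(revenue=50_000, active_users=100_000)      # 0.5
margin = gross_margin(revenue=50_000, cost_of_revenue=30_000)  # 0.4
```

Reading these together matters more than any single number: a high DAU/MAU ratio paired with a thin gross margin suggests heavy usage that inference costs are eating into.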

Key Risks, Limitations, and What to Watch Next

AI companions are early in their lifecycle. Like the first wave of ICOs and NFTs, many experiments will fail or prove ethically questionable. Understanding the downside scenarios helps calibrate expectations.

- Over‑reliance and social withdrawal: some users may gradually reduce offline interactions, especially if they find human relationships more complex or demanding.

- Model hallucinations and bad advice: bots can still generate incorrect or misleading guidance on sensitive topics (health, finance, relationships).

- Platform centralization: concentration among a few providers could create data monopolies and single points of failure.

- Regulatory whiplash: sudden policy shifts could force product changes, similar to crypto exchanges adjusting to new requirements.

- Public backlash: cultural pushback against “outsourcing intimacy” could alter adoption curves or marketing strategies.

From a crypto/Web3 vantage point, the most relevant watchpoints include:

- Emergence of hybrid AI + Web3 apps where companions manage wallets, help interpret DeFi positions, or monitor on‑chain risk signals.

- New identity and reputation layers that let users carry relationships safely across platforms.

- Regulatory convergence, where AI and crypto guidelines intersect around data protection, financial advice, and consumer safety.

Conclusion and Practical Next Steps

AI companions are a clear signal of where human‑software interaction is heading: persistent, personalized, emotionally aware, and monetized. For users, the priority is mindful, bounded engagement with strong data hygiene. For builders, it is safety‑first design, transparent incentives, and readiness for regulation. For crypto and Web3 stakeholders, this is an adjacent frontier that will increasingly intersect with on‑chain identity, tokenized economies, and digital asset flows.

If you want to go deeper from here:

- Map your stack: List where AI companions could plug into your current crypto or Web3 products (education bots, on‑chain analytics coaches, governance explainers).

- Design a safety spec: Draft a minimal standard for data handling, consent, and emotional safeguards if you plan to integrate companion‑style features.

- Prototype with clear constraints: Build small, purpose‑driven bots (e.g., DeFi explainer, NFT portfolio coach) rather than open‑ended “best friend” agents.

- Monitor regulation: Track AI policy from the EU, US, and Asia, and look for overlap with crypto regulation on consumer protection and data rights.

- Engage cross‑discipline experts: Combine AI engineers, crypto protocol designers, ethicists, and mental health professionals when designing high‑engagement systems.

The core macro trend is unmistakable: people are increasingly comfortable forming meaningful bonds with software. How we architect the underlying incentives, governance, and safeguards—lessons that the crypto community has spent a decade learning the hard way—will determine whether AI companions become a healthy augmentation of human connection or another exploitative attention sink.