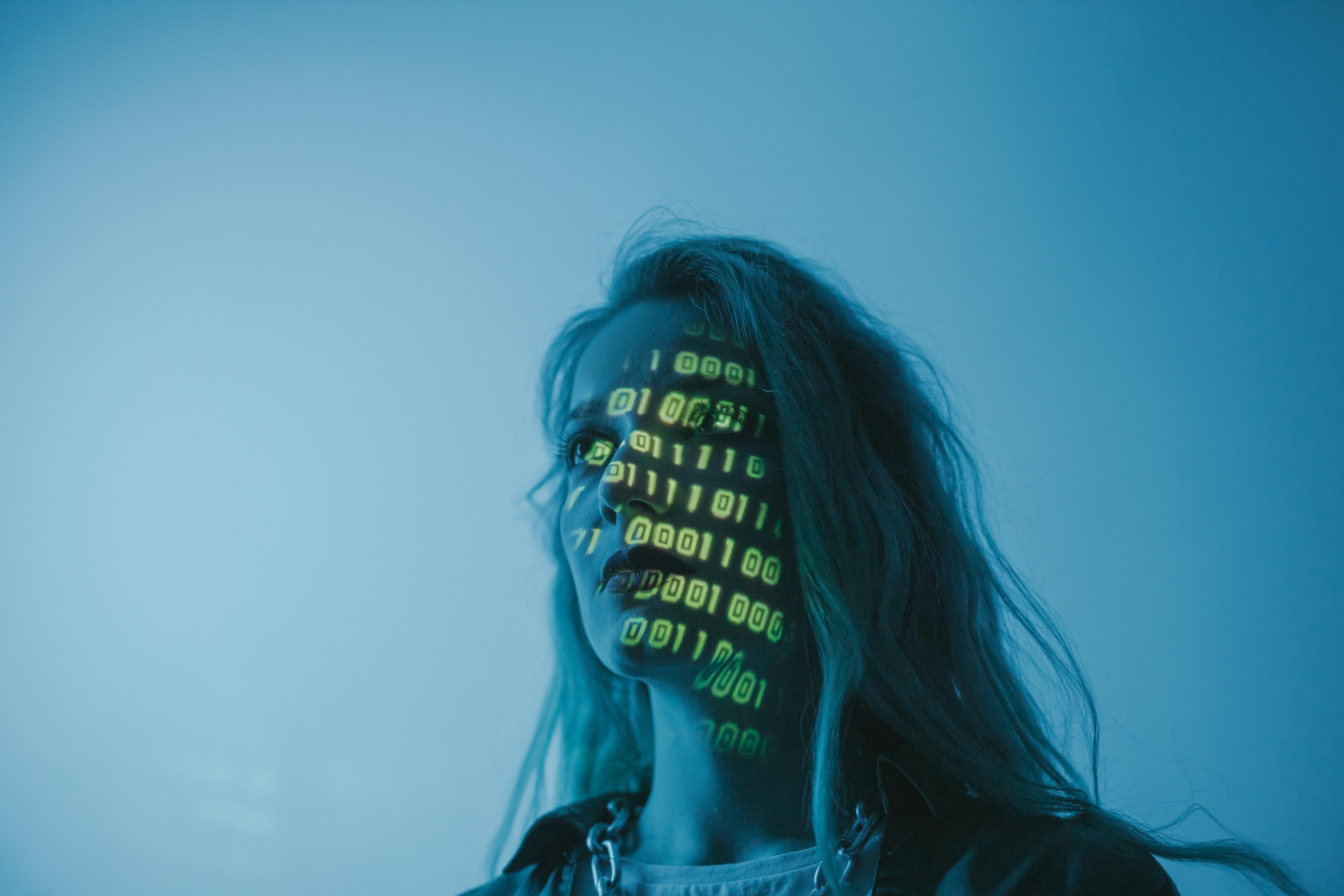

Open-Source vs Closed-Source AI: Who Will Control the Future of Intelligence?

The debate over open-source vs closed-source AI is rapidly becoming one of the central questions of the digital era: who gets to see, run, and modify the most advanced models, and under what terms? On one side are open communities pushing for transparency, forkability, and broad access to model weights. On the other are companies arguing that proprietary control is essential for safety, reliability, and the massive investment needed to train frontier systems.

This conflict touches everything from licensing and antitrust to cybersecurity, geopolitics, and scientific progress. Regulators are watching how a handful of firms control foundation models, GPU supply, and cloud infrastructure, while researchers and developers experiment with increasingly capable open models that can run on consumer hardware. The stakes are long-term: how this battle is resolved will influence who captures value from AI, who sets norms and guardrails, and whether smaller players and the public can meaningfully participate in the AI ecosystem.

Mission Overview: What Is at Stake in the Open vs Closed AI Divide?

At its core, the open-source vs closed-source AI debate is about balancing four competing imperatives:

- Innovation and speed – How quickly can the ecosystem iterate on new capabilities?

- Safety and security – How do we prevent misuse while enabling beneficial applications?

- Economic fairness and competition – Who captures the value produced by AI systems?

- Democratic access and control – Who gets to shape how AI is built and governed?

Open-source (or more precisely, open-weights) advocates argue that broad access is required for scientific rigor, independent evaluation, and resilience against monopolies. Proponents of closed-source AI counter that withholding weights, data, and training pipelines is necessary to prevent powerful models from being trivially repurposed for harmful uses and to recoup massive R&D and infrastructure costs.

“We are replaying the early internet vs walled garden fight, but with systems that can write code, design molecules, and manipulate information at scale.”

— Adapted from ongoing commentary by AI policy researchers across OpenAI, Anthropic, and academic labs

Licensing, “Open‑Washing,” and Model Access

The most visible fault line today is licensing. Not all “open” models are created equal, and many that are marketed as open actually sit somewhere between fully open-source and fully proprietary.

Truly Open vs Source-Available Models

A truly open-source AI model typically offers:

- Freely downloadable model weights

- Access to training code and often datasets or detailed data cards

- Permissive licenses allowing commercial and non-commercial use, modification, and redistribution (e.g., Apache-2.0, MIT, or another OSI-approved license)

By contrast, source-available or restricted models:

- May release weights but prohibit commercial use

- Impose usage caps, field-of-use restrictions, or limits on redistribution

- Often require API-based access for the most capable variants

This has triggered accusations of “open‑washing”, where marketing leans heavily on “open source” branding while legal terms retain strategic control. Analysts at publications like Ars Technica and The Verge routinely compare licenses and highlight gaps between branding and reality.

Why Licensing Details Matter

- Commercial viability – Startups and enterprises must know if they can ship products and raise capital on top of a given model.

- Longevity and dependence – Restrictive licenses can change or be revoked, reintroducing vendor lock‑in risks even when weights are published.

- Research freedoms – Academic and independent researchers often require explicit rights to modify, benchmark, and redistribute derivatives.
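
The criteria in this section can be turned into a rough checklist. The sketch below is illustrative only: the `LicenseTerms` fields and the three labels are assumptions made for this example, not a legal taxonomy, and the sample inputs do not describe any real model's license.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LicenseTerms:
    """Hypothetical summary of a model license, mirroring the criteria above."""
    weights_downloadable: bool
    commercial_use: bool
    modification: bool
    redistribution: bool
    field_of_use_restrictions: bool = False
    usage_caps: bool = False


def classify(terms: LicenseTerms) -> str:
    """Return a coarse label: 'api-only', 'source-available', or 'open'."""
    if not terms.weights_downloadable:
        return "api-only"
    grants_all = terms.commercial_use and terms.modification and terms.redistribution
    restricted = terms.field_of_use_restrictions or terms.usage_caps
    return "open" if grants_all and not restricted else "source-available"


# Illustrative inputs only.
permissive = LicenseTerms(True, True, True, True)
research_only = LicenseTerms(True, False, True, False)
hosted = LicenseTerms(False, True, False, False)

for name, terms in [("permissive", permissive),
                    ("research_only", research_only),
                    ("hosted", hosted)]:
    print(f"{name}: {classify(terms)}")
```

A checklist like this is a first-pass filter for a model shortlist. It does not replace reading the actual license text.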

“In AI, the license is the real interface. If you don’t read it carefully, you don’t actually know what you’re building on.”

— Paraphrasing common guidance from open-source legal experts

Competition, Antitrust, and Market Power

Open models are reshaping the competitive landscape. Developers in many sectors report that open alternatives approach or match proprietary performance at lower cost, especially on domain-specific tasks.

How Open Models Undercut Moats

- Fine-tuning on niche data – Teams can adapt general models to legal, medical, or industrial domains using relatively modest datasets.

- Inference on commodity hardware – Quantized and optimized open models can run efficiently on single GPUs or even high-end laptops.

- Transparent benchmarking – Open weights allow reproducible evaluations, reducing reliance on vendor marketing claims.

Open models are one check on concentration, which is partly why regulators in the US, EU, and elsewhere are paying attention. They are already probing whether a small cluster of big tech firms dominates:

- Foundation models (general-purpose LLMs and multimodal systems)

- Cloud compute platforms (necessary to train and serve large models)

- AI accelerator supply chains (e.g., high‑end GPUs and networking)

Regulatory and Policy Questions

Competition authorities are exploring questions such as:

- Do exclusive partnerships between major cloud providers and leading AI labs foreclose rivals?

- Are “preferred ecosystems” (tooling, SDKs, proprietary benchmarks) steering developers into tightly controlled platforms?

- Could limiting open-source AI under the banner of “safety” inadvertently entrench incumbent power?

“The risk is that the next computing platform becomes more concentrated than the last. AI is too important to be captured by a tiny number of gatekeepers.”

— Adapted from commentary by competition economists and digital policy scholars

Security and Safety: Is Open or Closed AI Safer?

Security and safety debates around model openness are especially intense. There is no consensus, and credible arguments exist on both sides.

Arguments for Open Models Being Safer

- Transparency for auditing – Researchers can independently test for jailbreaks, prompt injections, bias, and failure modes.

- Community red‑teaming – A broad pool of experts can stress‑test systems more comprehensively than any single company.

- Reproducible safety research – Open access enables robust, peer‑reviewed alignment methods that do not depend on private APIs.

- Resilience to monopoly power – A diverse ecosystem reduces single points of failure and political or corporate capture.

Arguments for Closed Models Being Safer

- Controlled access – APIs allow rate limits, identity checks, and anomaly detection for misuse (e.g., mass phishing, spam, or harassment).

- Harder to repurpose – Without weights, attackers cannot easily fine‑tune models for malicious tasks or integrate them offline.

- Centralized patching – Model providers can roll out safety updates globally without waiting for community adoption.
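
To make the "controlled access" argument concrete, here is a minimal sketch of a token-bucket rate limiter, one common way an API gateway can cap request rates per client. The class name and parameters are assumptions for illustration. Real providers combine rate limits with identity checks and anomaly detection, which this sketch does not cover.

```python
import time
from typing import Optional


class TokenBucket:
    """Token bucket: `capacity` is the burst size, `refill_rate` is tokens per second."""

    def __init__(self, capacity: float, refill_rate: float, now: Optional[float] = None):
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.tokens = capacity
        self.updated = time.monotonic() if now is None else now

    def allow(self, cost: float = 1.0, now: Optional[float] = None) -> bool:
        """Refill according to elapsed time, then spend `cost` tokens if available."""
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# Explicit timestamps keep the example deterministic.
bucket = TokenBucket(capacity=3, refill_rate=1.0, now=0.0)
burst = [bucket.allow(now=0.0) for _ in range(4)]  # fourth request exceeds the burst
after_wait = bucket.allow(now=2.0)                 # two seconds later, tokens have refilled
print(burst, after_wait)
```

This kind of control only works when the provider sits between the user and the model. Once weights are downloaded, nothing comparable can be enforced centrally.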

Where the Disagreement Is Sharpening

Recent policy proposals and expert reports often focus on:

- Model weights vs access controls – Should weights for frontier models be considered sensitive dual‑use artifacts?

- Data governance – How much do we need to control the training data vs the model itself to mitigate harms?

- Compute thresholds – Should regulation trigger when training runs exceed certain FLOP or capability thresholds?
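
A compute threshold is easier to reason about with a rough estimate in hand. The sketch below uses the widely cited approximation that training a dense transformer costs about 6 × N × D floating-point operations, where N is the parameter count and D is the number of training tokens. The two training runs are hypothetical, and the thresholds are example values: 1e25 is the figure used in the EU AI Act's systemic-risk presumption, and 1e26 appeared in the 2023 US executive order on AI, which has since been rescinded.

```python
def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute for a dense transformer as 6 * N * D."""
    return 6.0 * params * tokens


# Example thresholds in total training FLOP (see the paragraph above for sources).
THRESHOLDS = {"1e25": 1e25, "1e26": 1e26}


def thresholds_exceeded(params: float, tokens: float) -> list:
    """Return the names of all thresholds the estimated compute exceeds."""
    compute = training_flops(params, tokens)
    return [name for name, limit in THRESHOLDS.items() if compute > limit]


# Hypothetical training runs: (parameter count, training tokens).
small_run = thresholds_exceeded(7e9, 2e12)      # about 8.4e22 FLOP
large_run = thresholds_exceeded(4e11, 1.5e13)   # about 3.6e25 FLOP
print(small_run, large_run)
```

The approximation ignores architecture details such as mixture-of-experts sparsity, so it is a sanity check and not a compliance calculation.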

“Opacity is not the same thing as safety. But neither is radical openness a free lunch when capabilities are advancing this quickly.”

— Paraphrased from debates in AI safety and cybersecurity communities

Developer Ecosystem and Innovation Dynamics

For many practitioners, the most tangible aspect of the open vs closed divide shows up in daily engineering work: how easy is it to build, ship, and maintain AI-powered products?

Why Developers Gravitate Toward Open Models

- Self‑hosting and data control – Sensitive industries like healthcare, finance, and defense often prefer to keep inference within their own infrastructure.

- Customization – Teams can fine‑tune or adapt models to specific tasks, languages, or regulatory contexts.

- Cost predictability – Running optimized open models on dedicated hardware can be cheaper and more predictable than per‑token API pricing at scale.

- Avoiding vendor lock‑in – Open-weights models reduce dependency on the pricing, terms of service, or survival of any single provider.
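
The cost-predictability point reduces to a break-even calculation. The sketch below compares per-token API pricing with a fixed monthly self-hosting cost. Every number in it is a made-up placeholder, and the function names are assumptions for this example. It also assumes the self-hosted hardware can serve the whole volume, which needs to be checked separately.

```python
def api_monthly_cost(tokens_per_month: float, price_per_million: float) -> float:
    """Cost of a usage-priced API, given a price per million tokens."""
    return tokens_per_month / 1_000_000 * price_per_million


def self_hosted_monthly_cost(hardware_cost: float, amortization_months: int,
                             monthly_opex: float) -> float:
    """Amortized hardware plus power, hosting, and staff time per month."""
    return hardware_cost / amortization_months + monthly_opex


def break_even_tokens(fixed_monthly_cost: float, price_per_million: float) -> float:
    """Monthly token volume at which the API bill equals the fixed cost."""
    return fixed_monthly_cost / price_per_million * 1_000_000


# Placeholder figures for illustration only.
fixed = self_hosted_monthly_cost(hardware_cost=24_000, amortization_months=24,
                                 monthly_opex=500)
threshold = break_even_tokens(fixed, price_per_million=2.0)
print(f"fixed cost per month: {fixed:.0f}")
print(f"break-even volume: {threshold:,.0f} tokens per month")
```

Below the break-even volume the API is cheaper. Above it the fixed cost wins, provided throughput keeps up.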

Local and Edge Inference

YouTube, GitHub, and TikTok are full of tutorials showing how to run LLMs locally on:

- High‑end laptops with consumer GPUs

- Gaming PCs repurposed for model inference

- Compact edge devices for offline or low‑latency applications

These workflows often emphasize privacy (no data leaves the device) and ownership (no recurring subscription needed to keep using a model).

Complementary Hardware and Tools

For practitioners who want to experiment with local AI, consumer-grade GPUs remain a key enabler. For example, GPUs like the NVIDIA GeForce RTX 4070 can run many small and mid-sized open models once they are quantized to fit in the card's memory.
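
Whether a model fits on a given card is mostly arithmetic: weight memory is roughly the parameter count times bits per weight, divided by eight. The sketch below applies that rule with an assumed 20% overhead for the KV cache and activations and an assumed 12 GB of VRAM, the figure commonly listed for the desktop RTX 4070. Model sizes are illustrative, and real usage varies with context length and runtime.

```python
def weight_memory_gb(params: float, bits_per_weight: float) -> float:
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


def fits_in_vram(params: float, bits_per_weight: float, vram_gb: float,
                 overhead: float = 1.2) -> bool:
    """Rough fit check; `overhead` is an assumed multiplier for cache and activations."""
    return weight_memory_gb(params, bits_per_weight) * overhead <= vram_gb


VRAM_GB = 12.0  # assumed card capacity

for params, bits in [(7e9, 16), (7e9, 4), (13e9, 4)]:
    size = weight_memory_gb(params, bits)
    verdict = "fits" if fits_in_vram(params, bits, VRAM_GB) else "does not fit"
    print(f"{params / 1e9:.0f}B params at {bits}-bit: {size:.1f} GB of weights, {verdict}")
```

This is why quantization matters for local inference: the same 7B model that overflows the card at 16-bit precision fits with room to spare at 4-bit.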

Developers also rely heavily on:

- Open-source serving and retrieval stacks (e.g., inference servers, vector databases)

- Evaluation harnesses and benchmark suites

- Community-curated model hubs and documentation

Technology: Architectures, Training Pipelines, and Governance Layers

Both open-source and closed-source AI models typically share similar core architectures: transformer-based networks, mixture‑of‑experts layers for scaling, and multimodal encoders/decoders. The real differences emerge in:

- How training data is collected and filtered

- How alignment and safety layers are implemented

- How access and governance are enforced at deployment time

Training and Fine-Tuning Pipelines

A typical modern pipeline includes:

- Pretraining on large text and/or multimodal datasets, using self-supervised objectives.

- Instruction tuning on curated question–answer pairs and task demonstrations.

- Reinforcement learning from human feedback (RLHF) or related techniques to shape behavior.

- Safety tuning and red‑teaming to reduce harmful outputs and jailbreak susceptibility.

In the open-source ecosystem, many of these stages are documented in public repositories and papers, allowing others to iterate rapidly. Proprietary labs often keep detailed data curation and alignment recipes confidential, even when they publish high-level research.

Governance and Control Layers

On top of raw models, both camps are building governance stacks:

- Content filters for disallowed topics and categories

- Usage analytics to detect abuse patterns

- Policy engines that encode regional regulations or customer-specific rules

In closed systems, these layers are typically server‑side and centrally enforced. In open systems, filters and policies may be partially client-side or community-maintained, raising questions about consistency and accountability but enabling greater experimentation.
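
As a rough illustration of the three layers listed above, the sketch below wraps an arbitrary model call with a keyword content filter, per-region rules, and a usage log. All names here are hypothetical. Production filters use classifiers instead of substring matching, and the echo backend stands in for a real model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class GovernanceLayer:
    backend: Callable[[str], str]   # any model call, local or remote
    blocked_terms: Set[str]         # toy content filter: lowercase keywords
    region_rules: Dict[str, Set[str]] = field(default_factory=dict)  # extra terms per region
    usage_log: List[dict] = field(default_factory=list)              # usage analytics

    def generate(self, user: str, region: str, prompt: str) -> str:
        """Apply global and regional rules, log the request, then call the model."""
        banned = self.blocked_terms | self.region_rules.get(region, set())
        lowered = prompt.lower()
        blocked = any(term in lowered for term in banned)
        self.usage_log.append({"user": user, "region": region, "blocked": blocked})
        if blocked:
            return "[refused by policy]"
        return self.backend(prompt)


def echo_backend(prompt: str) -> str:
    """Stand-in for a real model."""
    return f"echo: {prompt}"


layer = GovernanceLayer(
    backend=echo_backend,
    blocked_terms={"malware"},
    region_rules={"eu": {"biometric"}},
)
print(layer.generate("alice", "us", "Write a haiku about GPUs"))
print(layer.generate("bob", "eu", "Design a biometric scoring system"))
print(layer.usage_log)
```

The same wrapper runs whether `backend` is a hosted API or a local model. The difference the text describes is who controls the wrapper: a provider enforces it server-side, while a self-hoster can modify or remove it.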

Scientific Significance: Reproducibility, Benchmarks, and Collective Intelligence

From the perspective of science and engineering, the openness of AI models has major consequences for how quickly and reliably the field advances.

Reproducible Research

When weights, code, and data are openly available, other groups can:

- Verify reported performance on public and private benchmarks

- Stress‑test generalization to new domains and languages

- Explore scaling laws and architectural variants

- Identify failure modes, biases, and emergent behaviors

Benchmark Inflation and Hidden Tricks

Closed models are sometimes accused of benchmark “gaming,” where performance on public leaderboards improves through aggressive prompt engineering, training on benchmark-like data, or hidden evaluation tricks that are difficult for outsiders to scrutinize. Open models, by contrast, allow:

- Transparent evaluation protocols

- Community-owned benchmark suites

- Independent replication by academic labs and companies
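
A transparent evaluation protocol can be very small. The sketch below scores a model by exact match and records a fingerprint of the benchmark data so that another group can confirm it evaluated the same examples. The dataset, the canned "model", and the report fields are all toy assumptions. Real harnesses also pin prompts, decoding settings, and model versions.

```python
import hashlib
import json
from typing import Callable, Dict, List


def dataset_fingerprint(examples: List[Dict[str, str]]) -> str:
    """Short SHA-256 prefix over a canonical JSON encoding of the examples."""
    canonical = json.dumps(examples, sort_keys=True, ensure_ascii=False).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:12]


def exact_match_accuracy(model: Callable[[str], str],
                         examples: List[Dict[str, str]]) -> float:
    """Fraction of examples where the normalized output equals the reference answer."""
    correct = sum(
        model(ex["prompt"]).strip().lower() == ex["answer"].strip().lower()
        for ex in examples
    )
    return correct / len(examples)


# Toy benchmark and a canned stand-in for a model.
examples = [
    {"prompt": "2 + 2 =", "answer": "4"},
    {"prompt": "Capital of France?", "answer": "Paris"},
    {"prompt": "Opposite of hot?", "answer": "cold"},
]
canned = {"2 + 2 =": "4", "Capital of France?": " paris ", "Opposite of hot?": "warm"}


def toy_model(prompt: str) -> str:
    return canned.get(prompt, "")


report = {
    "dataset_sha256_prefix": dataset_fingerprint(examples),
    "n": len(examples),
    "exact_match": exact_match_accuracy(toy_model, examples),
}
print(report)
```

With open weights, anyone can rerun such a script and compare both the score and the fingerprint. With API-only access, the model behind the endpoint may change between runs.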

“AI progress depends on a culture of reproducibility. Without open artifacts, it becomes harder to separate genuine advances from marketing.”

— Echoing concerns from leading machine learning researchers

Milestones in the Open vs Closed AI Ecosystem

Over the last few years, several milestones have defined the trajectory of this debate.

Key Milestones and Trends

- Release of competitive open LLMs – A wave of open models demonstrated that community-driven efforts can rapidly catch up to proprietary leaders on many benchmarks.

- Open-source toolchain explosion – Frameworks for orchestration, retrieval-augmented generation (RAG), and evaluation made it easier to build products on top of open models.

- Regulatory scrutiny of big-tech AI deals – Governments began probing strategic partnerships between cloud providers and model labs.

- Emergence of AI safety consortia – Multi-stakeholder groups formed to share best practices and coordinate on evaluation, sometimes straddling both open and closed worlds.

Shifts in Developer Sentiment

Surveys and community activity on GitHub, Hacker News, and professional networks like LinkedIn suggest strong interest in:

- Hybrid architectures that combine open and closed components

- Transparent roadmaps and deprecation policies

- Models that can be self‑hosted today but still integrate with cloud APIs when needed

Challenges: Legal, Ethical, and Technical Frictions

Both open and closed ecosystems face serious, and often complementary, challenges.

Challenges for Open-Source AI

- Misuse risks – Highly capable open models can be adapted for disinformation, fraud, or malware assistance.

- Funding and sustainability – Maintaining state-of-the-art models and infrastructure is expensive; community projects must secure long-term support.

- Liability and governance – It is not always clear who is responsible when an open model is fine-tuned and misused by third parties.

- Coordination on safety standards – Without centralized control, consistency in safety and content filtering is harder to achieve.

Challenges for Closed-Source AI

- Trust and verification – Users and regulators must largely take performance and safety claims at face value.

- Concentration of power – Centralized control over capabilities, data, and compute risks entrenching monopolies.

- Innovation bottlenecks – Gatekeeping can slow down experimentation and niche applications that do not fit a provider’s roadmap.

- Global equity – High API costs and data export restrictions can exclude low-resource regions and institutions.

Open Legal and Ethical Questions

As the AI stack matures, several unresolved questions grow more pressing:

- How should intellectual property law treat models trained on copyrighted or proprietary data?

- What constitutes due diligence for safety before releasing open-weights models?

- How can we design governance regimes that are both globally interoperable and locally accountable?

Practical Guidance for Organizations Choosing Between Open and Closed AI

Most organizations do not need to be ideological about openness. Instead, they can evaluate trade‑offs pragmatically for each use case.

Key Evaluation Questions

- Data sensitivity – Do regulations or internal policies require strict control over where data is processed?

- Latency and availability – Are low-latency or offline capabilities important (e.g., industrial controls, field operations)?

- Customization depth – How heavily must the model be adapted to proprietary workflows or terminology?

- Total cost of ownership – Does expected usage volume justify investing in in-house hosting and optimization?

- Risk tolerance – How much legal and reputational risk can the organization absorb as the regulatory environment evolves?

Hybrid Strategies

Many teams increasingly adopt hybrid architectures:

- Use closed APIs for frontier capabilities where safety and reliability are paramount.

- Deploy open-weights models on-premises for sensitive data, specialized tasks, or cost optimization.

- Implement central policy and logging layers that apply consistently across both open and closed components.
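
The three points above can be sketched as a small router. Requests flagged as sensitive stay on a self-hosted open-weights model, requests that need frontier capability go to a closed API, and every request passes through one shared audit log. The routing rule, class names, and backend labels are assumptions for illustration, and the lambdas stand in for real model clients.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Request:
    prompt: str
    contains_sensitive_data: bool
    needs_frontier_capability: bool


def choose_backend(req: Request) -> str:
    """Sensitive data always stays local; otherwise use the API only when needed."""
    if req.contains_sensitive_data:
        return "local_open"
    if req.needs_frontier_capability:
        return "closed_api"
    return "local_open"


class HybridRouter:
    def __init__(self, backends: Dict[str, Callable[[str], str]]):
        self.backends = backends
        self.audit_log: List[Dict[str, object]] = []  # one log for both backends

    def handle(self, req: Request) -> str:
        name = choose_backend(req)
        self.audit_log.append({"backend": name, "sensitive": req.contains_sensitive_data})
        return self.backends[name](req.prompt)


router = HybridRouter({
    "local_open": lambda prompt: f"[local] {prompt}",
    "closed_api": lambda prompt: f"[api] {prompt}",
})
print(router.handle(Request("Summarize this patient note", True, True)))
print(router.handle(Request("Draft a long technical proof", False, True)))
print(router.handle(Request("Classify this support ticket", False, False)))
```

Putting data sensitivity first in `choose_backend` is a deliberate choice in this sketch: a sensitive request never leaves local infrastructure, even if a stronger model is available remotely.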

Conclusion: Toward a Pluralistic AI Ecosystem

The open-source vs closed-source AI debate is not likely to produce a single “winner.” Instead, we are trending toward a pluralistic ecosystem in which:

- Open models drive experimentation, education, and independent scrutiny.

- Closed models deliver tightly managed products and frontier capabilities under controlled conditions.

- Hybrid frameworks allow organizations to mix and match components according to risk, cost, and performance needs.

The most constructive path forward is less about choosing sides and more about:

- Establishing clear, interoperable safety and transparency standards.

- Ensuring that competition policy prevents excessive concentration of power.

- Creating responsible pathways for open research and community innovation.

- Investing in education so that policymakers, businesses, and the public can understand the trade‑offs.

The outcome of this struggle will determine whether AI becomes an infrastructure that many can shape and benefit from, or one controlled by a small number of powerful institutions. Staying informed about licensing, governance, and ecosystem dynamics is now as essential for technical leaders as understanding model architectures or benchmark scores.

Additional Resources and Further Reading

To explore this topic in more depth, consider the following types of resources:

- Long-form journalism from outlets like Wired, Ars Technica, and The Verge on AI licensing and competition.

- Technical reports and policy briefs by academic labs, AI safety organizations, and standards bodies.

- Developer tutorials on YouTube demonstrating local model deployment and optimization.

- Discussions on professional platforms such as LinkedIn articles and engineering blogs that share real-world benchmarks and cost analyses.

As capabilities continue to accelerate beyond 2026, regularly revisiting these debates—and updating organizational strategies accordingly—will be critical to using AI responsibly and competitively.

References / Sources

The following representative sources provide additional context and analysis related to licensing, competition, safety, and open vs closed AI ecosystems: