Why Spatial Computing and Mixed Reality Headsets Are Finally Ready for Real Work

Spatial computing describes the blending of digital content with the physical world, typically through mixed reality (MR) or extended reality (XR) headsets that understand your room, your hands, and your gaze. After the metaverse hype of the early 2020s faded, a new wave of devices released in 2024 and 2025 by Apple, Meta, HTC, Lenovo, and others has revived the conversation. This time the focus is on sustained daily use rather than flashy demos.

Reviews from outlets such as The Verge, Engadget, TechRadar, Ars Technica, and Wired increasingly evaluate these headsets as serious computers rather than toys: Can they replace a laptop screen? Can they support all‑day work? How do developers ship cross‑platform XR apps without rewriting everything?

At the same time, YouTube and TikTok creators share “day in the life with a headset” workflows, from editing videos in floating windows to playing mixed reality fitness games that adapt to a real living room. This convergence of media attention, developer interest, and enterprise trials suggests that spatial computing is entering an experimental but durable phase, much as smartphones did around 2008–2010.

Mission Overview: What Spatial Computing Aims to Achieve

The core mission of spatial computing is to treat space itself as a computing surface. Instead of apps being confined to a 2D screen, windows, tools, and data are anchored to the world around you — your desk, your wall, your factory floor, your operating room.

- Persistent digital workspaces that remember where your virtual screens “live” in your home or office.

- Context-aware interfaces that change tools based on what you look at or physically interact with.

- Collaborative mixed reality rooms where remote colleagues share the same spatial layout of whiteboards, 3D models, and notes.

- Task-specific 3D experiences — pre-surgical planning, factory layout optimization, architectural walkthroughs — that are much harder to understand on a flat monitor.

“We’re moving from computing that fits in your pocket to computing that fits around your world.” — Adapted from long‑running mixed reality research discussions in human–computer interaction.

Technology: How Modern Mixed Reality Headsets Actually Work

The leap from early VR to today’s mixed reality comes from a stack of interlocking technologies: high‑density micro‑OLED or LCD panels, low‑latency passthrough cameras, advanced on‑device processors, and sophisticated spatial sensing. Together, these components make it possible to overlay sharp, correctly‑lit virtual objects onto your real environment with far less motion sickness or visual fatigue than early headsets.

Core Hardware Building Blocks

- Displays: 4K‑class per‑eye resolution is increasingly common in premium headsets, dramatically improving text readability for coding, writing, and spreadsheet work.

- Pancake lenses: Thinner, lighter optics that replace bulky Fresnel lenses, reducing weight and visual artifacts.

- Passthrough cameras: High‑resolution, color‑accurate cameras with depth estimation provide a believable view of the real world behind digital overlays.

- Inside‑out tracking: On‑board cameras track your head, hands, and controllers without external base stations, making headsets easier to set up and more portable.

- Eye and hand tracking: Eye tracking enables “foveated rendering,” where only the region you’re directly looking at is rendered in full resolution, greatly improving performance. Hand tracking replaces controllers in many productivity scenarios.

- Spatial audio: Precise 3D audio cues make virtual objects feel anchored in space and help users locate sources of information by sound alone.
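The foveated rendering idea in the eye-tracking item can be sketched as a simple classification: measure the angle between the gaze ray and the view ray through a screen region, then pick a render-resolution multiplier from that angle. This is a minimal illustration, not how any particular engine or SDK implements it. The function names are hypothetical, and the 5 and 20 degree zone boundaries and the 0.5/0.25 scales are assumptions chosen for readability.

```python
import math

def eccentricity_deg(gaze_dir, pixel_dir):
    """Angle in degrees between the gaze ray and the view ray through a pixel."""
    dot = sum(g * p for g, p in zip(gaze_dir, pixel_dir))
    norms = math.hypot(*gaze_dir) * math.hypot(*pixel_dir)
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norms))))

def resolution_scale(ecc_deg, fovea_deg=5.0, mid_deg=20.0):
    """Render-resolution multiplier for a region (1.0 = full resolution).

    The 5 and 20 degree boundaries are illustrative assumptions, not values
    taken from any particular headset or SDK.
    """
    if ecc_deg <= fovea_deg:
        return 1.0   # foveal region: render at full detail
    if ecc_deg <= mid_deg:
        return 0.5   # near periphery: half resolution per axis
    return 0.25      # far periphery: quarter resolution per axis

gaze = (0.0, 0.0, -1.0)  # looking straight ahead (-z is forward here)
for direction in [(0.0, 0.0, -1.0), (0.2, 0.0, -1.0), (1.0, 0.0, -1.0)]:
    ecc = eccentricity_deg(gaze, direction)
    print(f"{ecc:5.1f} deg -> scale {resolution_scale(ecc)}")
```

Production systems usually apply the same idea per tile through hardware variable-rate shading rather than per pixel. Because the eye tracker updates the gaze ray every frame, the full-resolution zone follows the user's eyes.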

Software and Developer Tooling

On the software side, spatial computing relies heavily on game engines and specialized SDKs:

- Engines like Unity and Unreal Engine provide XR templates, physics, lighting, and cross‑platform build pipelines.

- Platform SDKs expose hand tracking, eye tracking, spatial anchors, and passthrough video. Developers can query the geometry of your room or track specific surfaces like tables and walls.

- WebXR APIs in modern browsers allow lighter‑weight, browser‑based XR experiences that don’t require app store installs.
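Spatial anchors are what let a virtual screen “remember” where it lives across sessions. Conceptually, an app stores each window's pose relative to an anchor rather than in world coordinates. When tracking relocalizes the anchor later, the app recomputes the window's world pose from the stored offset. The sketch below assumes a hypothetical yaw-only `Pose` class to keep the math short. Real SDKs track full 3D orientation, typically as a quaternion, and manage anchor persistence themselves.

```python
import math
from dataclasses import dataclass

@dataclass
class Pose:
    """Hypothetical simplified pose: position in metres plus yaw (radians) about the vertical y axis.

    Real SDKs track full 3D orientation (usually as a quaternion); yaw-only keeps the math short.
    """
    x: float
    y: float
    z: float
    yaw: float

    def compose(self, local: "Pose") -> "Pose":
        """Convert a pose expressed relative to this one into this pose's parent frame."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return Pose(
            self.x + c * local.x + s * local.z,  # rotate the offset about y, then translate
            self.y + local.y,
            self.z - s * local.x + c * local.z,
            self.yaw + local.yaw,
        )

    def inverse(self) -> "Pose":
        """Pose of the parent frame as seen from this one."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return Pose(-c * self.x + s * self.z, -self.y, -s * self.x - c * self.z, -self.yaw)

# Session 1: the user pins a window 1 m in front of an anchor on their desk.
anchor_day1 = Pose(0.0, 0.0, 0.0, 0.0)
window_world = Pose(0.0, 1.2, -1.0, 0.0)
window_local = anchor_day1.inverse().compose(window_world)  # the offset that gets persisted

# Session 2: tracking relocalizes the same physical anchor at a different world pose.
anchor_day2 = Pose(2.0, 0.0, 0.0, math.pi / 2)
restored = anchor_day2.compose(window_local)
print(restored)
```

Storing the offset rather than the world position means the window follows the desk even if the headset's world origin changes between sessions.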

“The hardest problem in XR isn’t drawing polygons — it’s designing interactions that feel natural in 3D.” — Common refrain among XR developers on forums like Hacker News and in GDC talks.

Beyond Gaming: Spatial Computing for Productivity and Collaboration

A defining feature of the 2024–2025 wave is the shift from “VR as gaming console” to “MR as spatial computer.” Instead of just playing games, users are writing code, managing projects, and designing 3D models in immersive environments that behave like multi‑monitor setups floating around their desk.

Use Cases Emerging in the Wild

- Virtual multi‑monitor offices — Developers pin three to five large virtual screens around their desk, with terminal windows, documentation, and IDEs all visible at once.

- Design and 3D modeling — Architects and artists manipulate CAD models or sculpts directly in 3D space, naturally walking around objects and inspecting details.

- Remote whiteboarding — Distributed teams gather around shared 3D canvases, annotate diagrams, and manipulate data visualizations in real time.

- Code visualization — Experimental tools map software architectures into 3D graphs, letting engineers explore dependencies as spatial structures.
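One simple way to turn a software architecture into a spatial structure is layered placement. Each module's layer is its dependency depth: modules with no dependencies sit on the floor layer, and everything that depends on them stacks above. The sketch below is a hypothetical illustration of that idea and does not reproduce any specific tool's method. Many experimental tools use force-directed layouts instead. The function names and the spacing values (in metres) are assumptions.

```python
from collections import defaultdict

def layer_depths(deps: dict[str, list[str]]) -> dict[str, int]:
    """Depth 0 = module with no dependencies; otherwise 1 + the deepest dependency."""
    depth: dict[str, int] = {}
    visiting: set[str] = set()

    def visit(node: str) -> int:
        if node in depth:
            return depth[node]
        if node in visiting:
            raise ValueError(f"dependency cycle through {node!r}")
        visiting.add(node)
        # default=-1 makes a module with no dependencies land on layer 0
        d = 1 + max((visit(dep) for dep in deps.get(node, [])), default=-1)
        visiting.discard(node)
        depth[node] = d
        return d

    for node in deps:
        visit(node)
    return depth

def layout_3d(deps: dict[str, list[str]], layer_gap: float = 0.5, item_gap: float = 0.3):
    """Map each module to (x, y, z) in metres: one horizontal row per layer, stacked upward in y."""
    layers: dict[int, list[str]] = defaultdict(list)
    for node, d in sorted(layer_depths(deps).items()):
        layers[d].append(node)
    positions = {}
    for d, nodes in layers.items():
        offset = (len(nodes) - 1) * item_gap / 2  # center each row on x = 0
        for i, node in enumerate(nodes):
            positions[node] = (i * item_gap - offset, d * layer_gap, 0.0)
    return positions

deps = {"app": ["api", "ui"], "api": ["db"], "ui": [], "db": []}
for module, pos in sorted(layout_3d(deps).items()):
    print(module, pos)
```

The recursive depth search is fine for illustration. Very large codebases would need an iterative topological sort to avoid Python's recursion limit.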

Long‑form YouTube videos, such as “week of work on a headset” vlogs, document both the benefits (focus, vast screen real estate, novel workflows) and the trade‑offs (comfort, battery life, text clarity). The emerging consensus is that spatial computing is highly effective for specific kinds of deep work but not yet a full laptop replacement.
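Text clarity on a virtual monitor comes down to arithmetic. A flat screen of width w viewed from distance d subtends 2·atan(w / 2d). The number of headset pixels covering that angle is roughly the angle times the display's average pixels per degree. The sketch below works through that calculation. It also places several screens on an arc around the user, as in the multi-monitor setups described above. The headset figures (3,840 horizontal pixels per eye over a 100° field of view) are hypothetical, and real lenses spread pixels unevenly, so pixels per degree is only an average.

```python
import math

def angular_width_deg(width_m: float, distance_m: float) -> float:
    """Horizontal angle subtended by a flat screen viewed head-on from its center line."""
    return math.degrees(2 * math.atan(width_m / (2 * distance_m)))

def pixels_per_degree(h_pixels: int, h_fov_deg: float) -> float:
    """Average display density; real lenses distribute pixels unevenly, so this is a rough figure."""
    return h_pixels / h_fov_deg

def effective_pixels(width_m: float, distance_m: float, h_pixels: int, h_fov_deg: float) -> float:
    """Approximate number of headset pixels spanning a virtual screen horizontally."""
    return angular_width_deg(width_m, distance_m) * pixels_per_degree(h_pixels, h_fov_deg)

def arc_layout(n: int, width_m: float, radius_m: float, gap_deg: float = 2.0):
    """Place n screens on a horizontal arc around a user at the origin who faces -z.

    Returns (x, z, yaw_deg) per screen, left to right. yaw_deg is a right-handed
    rotation about +y that turns each screen's front (+z) toward the user.
    """
    step = angular_width_deg(width_m, radius_m) + gap_deg
    start = -step * (n - 1) / 2
    layout = []
    for i in range(n):
        a = math.radians(start + i * step)
        layout.append((radius_m * math.sin(a), -radius_m * math.cos(a), -math.degrees(a)))
    return layout

# Hypothetical headset: 3840 horizontal pixels per eye across a 100 degree field of view.
print(round(effective_pixels(1.0, 1.0, 3840, 100.0)))
for x, z, yaw in arc_layout(3, 1.0, 1.0):
    print(f"x={x:+.2f} m  z={z:+.2f} m  yaw={yaw:+.1f} deg")
```

Under these assumptions, a 1 m wide virtual screen at 1 m gets about 2,040 headset pixels across. That is roughly half the 3,840 of a physical 4K monitor, which helps explain why text clarity keeps appearing among the trade-offs.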

Gaming and Immersive Media: Mixed Reality as a Playground

While productivity gets the headlines, gaming and immersive media continue to push the boundaries of what mixed reality can do. High‑budget titles and inventive indie games now blend digital and physical environments, turning your living room into an interactive stage.

Trends in XR Gaming

- Room‑scale MR puzzle games where virtual mechanisms are attached to real furniture and walls.

- Fitness and movement apps that adapt workout intensity and movement patterns to your available physical space.

- Narrative experiences that use your actual home layout as part of the story, delivering prompts and characters that “know” where you are.

- Shared MR social spaces where avatars inhabit a hybrid of your room and a virtual environment, letting friends join no matter where they live.
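A fitness app that adapts to available space needs a measure of that space. Many standalone headsets let users trace a play-area boundary on the floor. The sketch below assumes the app receives that boundary as an ordered list of (x, z) floor points. It computes the enclosed area with the shoelace formula and picks a workout tier. The function names, tier labels, and square-metre thresholds are all hypothetical.

```python
def polygon_area(points: list[tuple[float, float]]) -> float:
    """Shoelace formula: area in square metres of a simple polygon of (x, z) floor vertices, in order."""
    twice_area = 0.0
    for i, (x1, z1) in enumerate(points):
        x2, z2 = points[(i + 1) % len(points)]  # wrap around to close the polygon
        twice_area += x1 * z2 - x2 * z1
    return abs(twice_area) / 2

def choose_workout_mode(boundary, full_min_m2: float = 4.0, standing_min_m2: float = 1.0) -> str:
    """Pick a hypothetical workout tier from the play-area size; thresholds are illustrative."""
    area = polygon_area(boundary)
    if area >= full_min_m2:
        return "full-movement"  # room for lunges and side steps
    if area >= standing_min_m2:
        return "standing"       # in-place and upper-body moves only
    return "seated"

living_room = [(0.0, 0.0), (2.5, 0.0), (2.5, 2.0), (0.0, 2.0)]
print(polygon_area(living_room), choose_workout_mode(living_room))
```

Area alone ignores shape: a long, narrow hallway can have the same area as a square room but allow fewer side steps. A fuller version would also check the boundary's minimum width.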

“The best mixed reality games aren’t trying to escape your living room — they’re trying to enchant it.” — Paraphrased from reviews on Engadget and The Next Web.

Social media platforms like TikTok and Instagram are amplifying these experiences through short MR clips, where virtual creatures perch on real sofas or game elements scatter across actual floors. This casual, shareable content helps mainstream audiences understand what spatial computing looks like without needing to try a headset themselves.

Enterprise and Industrial Adoption: Where XR Delivers Immediate Value

Perhaps the strongest signal that spatial computing is moving beyond hype comes from enterprise and industrial deployments. Unlike consumer markets, these sectors can justify high‑end headsets if they deliver measurable efficiency, safety, or training benefits.

Key Enterprise Scenarios

- Remote assistance and field service

  - Technicians wear a headset while experts see their field of view remotely.

  - Instructions, arrows, and part numbers are overlaid directly on real machinery.

- Digital twins of factories and infrastructure

  - Operators visualize real‑time sensor data on top of 3D models of their facilities.

  - Teams simulate layout changes before physically moving heavy equipment.

- Healthcare and surgical planning

  - Surgeons rehearse operations using patient‑specific 3D reconstructions.

  - Medical students train with interactive anatomy models and guided procedures.

- Immersive education and training

  - Complex procedures — from power‑plant safety to aircraft maintenance — are practiced in realistic, repeatable virtual scenarios.
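In remote assistance, an expert's annotation has to line up with the real part in two places: on the expert's 2D video view and in the technician's 3D space. The standard pinhole camera model handles both directions. Projection maps a 3D point to a pixel. Unprojection with a depth estimate, such as one derived from the passthrough cameras, maps a clicked pixel back to 3D. This sketch uses OpenCV-style camera axes (x right, y down, z forward) and ignores lens distortion. The intrinsic values and the valve scenario are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera parameters, all in pixels (hypothetical values in the example below)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y

def project(point_cam, k: Intrinsics):
    """Camera-frame point (x right, y down, z forward, metres) -> pixel (u, v), or None if behind the camera."""
    x, y, z = point_cam
    if z <= 0:
        return None
    return (k.fx * x / z + k.cx, k.fy * y / z + k.cy)

def unproject(u: float, v: float, depth_m: float, k: Intrinsics):
    """Inverse of project for a known depth: pixel plus depth -> camera-frame point."""
    return ((u - k.cx) * depth_m / k.fx, (v - k.cy) * depth_m / k.fy, depth_m)

cam = Intrinsics(fx=800.0, fy=800.0, cx=640.0, cy=360.0)
# The expert clicks a valve in the streamed video; a depth estimate of 1.8 m places it in 3D.
valve = unproject(900.0, 300.0, 1.8, cam)
print(valve, project(valve, cam))
```

Once the clicked point is in 3D, the headset can keep the arrow attached to the valve as the technician moves. Re-projecting each frame keeps it correctly placed on the expert's view.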

“For factories and hospitals, the ROI case for mixed reality is far clearer than for living rooms.” — Analysis echoed across Wired and Ars Technica coverage.

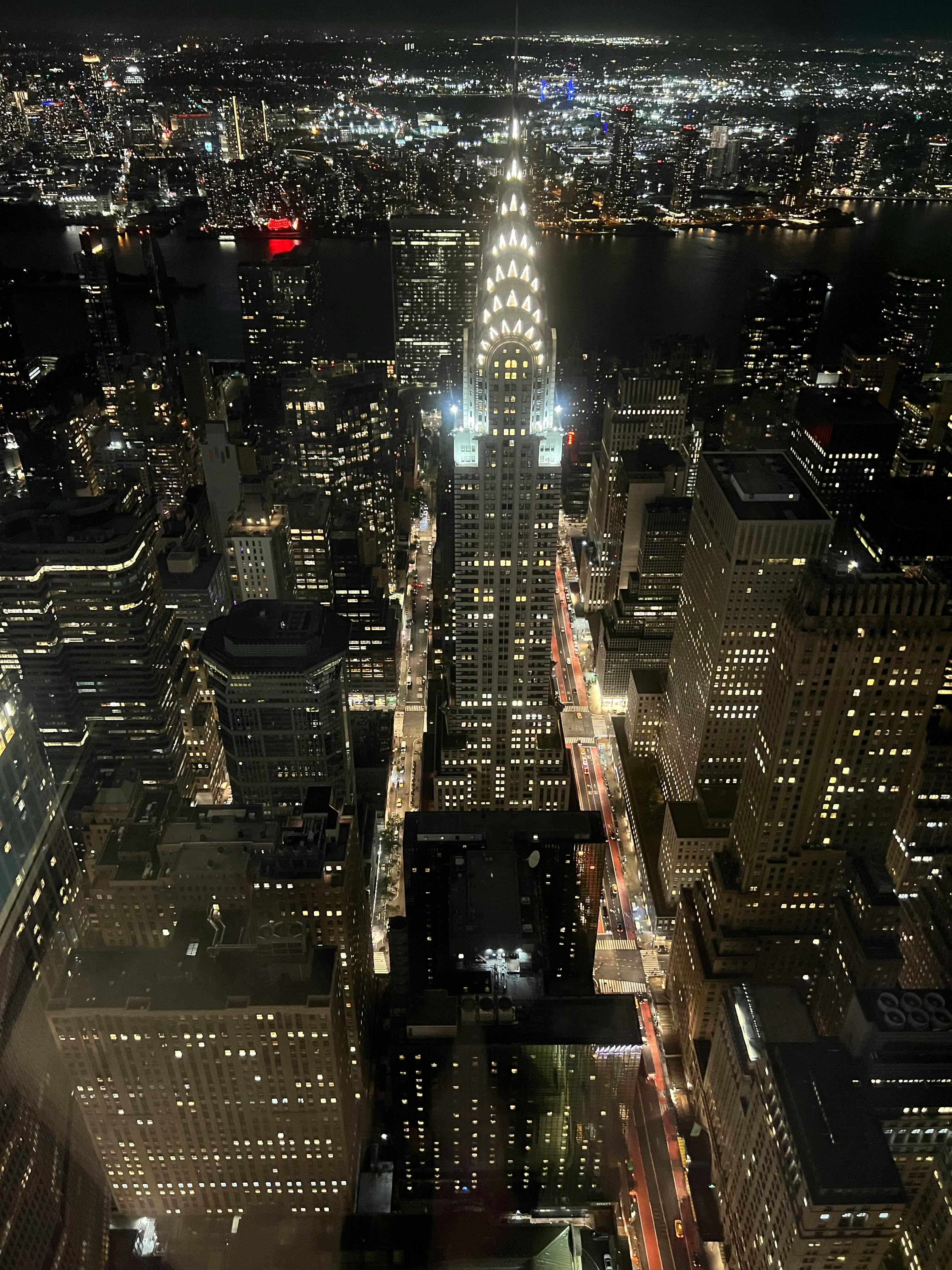

Visualizing Spatial Computing: Example Imagery

Scientific and Human–Computer Interaction Significance

Spatial computing isn’t just a gadget story; it’s a major frontier in human–computer interaction (HCI), perception science, and ergonomics. Researchers study how depth cues, field of view, locomotion techniques, and interaction metaphors affect comfort, performance, and understanding.

Key Research Themes

- Perceptual comfort — Managing the vergence‑accommodation conflict, in which the eyes converge at a virtual object’s apparent depth but focus at the fixed focal distance of the optics, to reduce eye strain and headaches.

- Embodied interaction — Leveraging natural gestures, postures, and locomotion while avoiding fatigue (the “gorilla arm” problem).

- Cognitive load — Determining how much spatial information users can process without becoming overwhelmed.

- Accessibility — Making XR usable for people with low vision, mobility limitations, or sensory sensitivities, drawing on WCAG 2.2 and related guidelines.
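The vergence-accommodation conflict can be quantified in diopters (inverse metres). It is the difference between 1/(distance the eyes converge to) and 1/(distance the optics make them focus at). The sketch below computes that mismatch and the range of content distances that stays within a tolerance. Both the 1.5 m focal distance in the example and the 0.5 D default tolerance are illustrative assumptions. Focal distance varies by headset, and comfort thresholds vary across studies and users.

```python
import math

def va_conflict_diopters(vergence_m: float, focal_m: float) -> float:
    """Mismatch between where the eyes converge and where the optics make them focus, in diopters (1/m)."""
    return abs(1.0 / vergence_m - 1.0 / focal_m)

def comfortable_depth_range(focal_m: float, tolerance_d: float = 0.5):
    """Nearest and farthest content distances (metres) whose conflict stays within tolerance_d.

    The 0.5 D default is an illustrative assumption, not a published standard.
    """
    focal_d = 1.0 / focal_m
    near = 1.0 / (focal_d + tolerance_d)
    far_d = focal_d - tolerance_d
    far = math.inf if far_d <= 0 else 1.0 / far_d  # tolerance reaches optical infinity
    return near, far

# Hypothetical optics focused at 1.5 m; a panel 0.4 m away conflicts far more than one at 2 m.
print(va_conflict_diopters(0.4, 1.5), va_conflict_diopters(2.0, 1.5))
print(comfortable_depth_range(1.5))
```

With these assumed numbers, content between roughly 0.86 m and 6 m stays within tolerance. That gives one argument for placing reading panels at arm's length or beyond rather than close to the face.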

“Spatial interfaces demand that we redesign decades of 2D UI patterns from first principles.” — A recurring theme in HCI conference papers and XR research forums.

Milestones: From Early VR to the 2024–2025 Spatial Computing Wave

The current momentum in spatial computing builds on more than a decade of iterative progress. Some notable milestones include:

- 2012–2016: Early consumer VR

  - Oculus Rift, HTC Vive, and PlayStation VR make high‑quality VR available to enthusiasts.

  - Focus is largely on seated or room‑scale gaming, with limited passthrough or mixed reality.

- 2016–2020: AR glasses and early MR

  - Devices like Microsoft HoloLens and Magic Leap introduce optical see‑through MR for enterprise.

  - Constraints include narrow field of view, high cost, and limited app ecosystems.

- 2020–2023: Standalone headsets and the metaverse hype

  - Standalone VR headsets proliferate, popularizing inside‑out tracking and wireless use.

  - “Metaverse” narratives peak but often overpromise relative to available experiences.

- 2024–2025: Spatial computing reframed

  - Mixed reality headsets emphasize productivity, hybrid work, and industrial use cases.

  - Media coverage shifts from hype to nuanced assessments of long‑term platform potential.

Challenges: Barriers Between Hype and Everyday Reality

Even with striking progress, spatial computing still faces serious obstacles. Thoughtful coverage from tech media and researchers emphasizes that XR is a long‑term bet, likely to take years of refinement before it becomes a universal tool.

Technical and Human Factors

- Comfort and ergonomics — Headsets must become lighter, better balanced, and more adjustable for different head shapes and hairstyles.

- Visual strain — Prolonged use can cause eye fatigue, especially if resolution, contrast, or optical alignment is suboptimal.

- Battery life and thermals — All‑day wear is unrealistic on most current devices without tethers or external batteries.

- Input design — Hand tracking and voice are promising but still inconsistent in complex, noisy environments.
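The battery constraint is simple division: usable capacity in watt-hours divided by average power draw in watts gives hours of runtime. The sketch below also counts how many pre-charged, swappable packs a full workday would need. The 36 Wh capacity, 15 W draw, and 90% usable fraction are hypothetical figures, not measurements of any real headset.

```python
import math

def runtime_hours(battery_wh: float, avg_power_w: float, usable_fraction: float = 0.9) -> float:
    """Estimated runtime; usable_fraction models capacity held in reserve (an assumed 90%)."""
    if avg_power_w <= 0:
        raise ValueError("average power draw must be positive")
    return battery_wh * usable_fraction / avg_power_w

def packs_for_workday(hours_needed: float, battery_wh: float, avg_power_w: float,
                      usable_fraction: float = 0.9) -> int:
    """Battery packs (charged in advance and swapped) needed to cover hours_needed."""
    per_pack = runtime_hours(battery_wh, avg_power_w, usable_fraction)
    return math.ceil(hours_needed / per_pack)

# Hypothetical figures: a 36 Wh pack and a 15 W average draw during productivity use.
print(f"{runtime_hours(36.0, 15.0):.2f} h per pack, {packs_for_workday(8.0, 36.0, 15.0)} packs for 8 h")
```

Under these assumptions, one pack lasts a little over two hours, so an eight-hour day needs four swaps' worth of packs. That illustrates why all-day wear usually means a tether or external batteries.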

Social, Ethical, and Privacy Concerns

- Always‑on cameras and sensors capturing environments and bystanders raise privacy questions at home, in offices, and in public spaces.

- Social acceptance — Wearing headsets in public still carries a stigma; eye contact and facial cues are partially obscured, affecting communication.

- Data governance — Sensor logs, gaze data, and biometrics are highly sensitive and must be handled with strict security and consent frameworks.
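One concrete form of careful data handling is minimization before anything leaves the device. In this approach, nothing is logged without explicit consent, and raw gaze streams are reduced to coarse aggregates. The sketch below assumes a hypothetical `GazeSample` record. It drops timestamps and fine-grained angles, keeping only counts per coarse angular cell. This illustrates the principle only and is not a complete privacy scheme: aggregates can still leak information and need their own access controls.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class GazeSample:
    """Hypothetical raw gaze record as a headset might report it."""
    timestamp_s: float
    yaw_deg: float    # horizontal gaze angle relative to the head
    pitch_deg: float  # vertical gaze angle relative to the head

def prepare_for_logging(samples, consented: bool, cell_deg: float = 10.0) -> dict:
    """Return coarse gaze counts for analytics, or nothing at all without opt-in consent.

    Timestamps and fine-grained angles are dropped; only per-cell counts survive.
    """
    if not consented:
        return {}
    counts: dict[tuple[int, int], int] = {}
    for s in samples:
        cell = (int(s.yaw_deg // cell_deg), int(s.pitch_deg // cell_deg))
        counts[cell] = counts.get(cell, 0) + 1
    return counts

raw = [GazeSample(0.00, 3.0, 4.0), GazeSample(0.01, 7.0, -2.0), GazeSample(0.02, 25.0, 1.0)]
print(prepare_for_logging(raw, consented=True))
```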

“The metaverse didn’t die — it just got quieter and more practical.” — A sentiment common in Recode‑style analyses of XR’s post‑hype evolution.

How Enthusiasts and Professionals Can Get Started

For individuals curious about spatial computing, the best path is incremental: start with focused use cases rather than trying to live entirely in a headset.

Practical Steps

- Define your goal — Are you interested in gaming, 3D creation, remote collaboration, or prototyping XR apps?

- Choose a headset class

  - Standalone MR headsets for convenience and mixed reality gaming or casual work.

  - PC‑tethered headsets for maximum graphical fidelity and advanced creation tools.

- Experiment with spatial workflows

  - Try virtual desktop apps to extend or temporarily replace your monitor.

  - Use MR whiteboarding tools for brainstorming sessions.

- Follow best practices

  - Limit session length initially and take regular breaks.

  - Optimize lighting and room setup for safe movement and reliable tracking.

If you want to understand how creators use headsets for real work, in‑depth YouTube channels focused on VR/MR productivity and LinkedIn posts from XR developers offer candid, experience‑based insights that go beyond marketing materials.

Learning, Tools, and Recommended Resources

A rich ecosystem of tutorials, research papers, and developer forums has emerged around XR and spatial computing. For those building or evaluating XR solutions, it’s worth exploring both practical guides and academic work.

Learning Resources

- Game engine documentation and XR developer portals for hands‑on SDK examples.

- HCI and VR conferences (e.g., ACM CHI, IEEE VR) for cutting‑edge research papers.

- Social platforms like LinkedIn and X (formerly Twitter) for insights from XR designers and engineers sharing real‑world lessons.

For readers who want to complement headset‑based work with sound ergonomics, accessories such as adjustable laptop stands and ergonomic keyboards can improve posture. Blackout curtains can help control lighting and glare in XR‑heavy workspaces.

Conclusion: A Long-Term, Incremental Shift in How We Compute

Spatial computing is not replacing phones and laptops overnight, and the industry has learned from the over‑promising of early metaverse narratives. Instead, 2024–2025 is shaping up as a period of careful experimentation and targeted deployment, where headsets become one more tool in a broader computing toolkit.

Hardware is finally good enough for serious work; software is catching up with better engines, SDKs, and UX patterns; and enterprises are validating real‑world value. At the same time, challenges in comfort, privacy, and social norms remain substantial. The most realistic outlook is that mixed reality will find durable, high‑value niches — from industrial training to creative workspaces — and slowly expand as the technology and culture mature.

For individuals and organizations, the most productive mindset is exploratory rather than utopian: identify a few tasks where depth, immersion, and spatial context genuinely help, and iterate from there. If the history of personal computing is any guide, today’s experimental spatial workflows may become tomorrow’s default way of working.

References / Sources

Below are selected sources and further reading for deeper exploration of spatial computing and mixed reality. All links were active as of late 2025:

- The Verge – XR and Virtual Reality Coverage

- Engadget – VR and Mixed Reality Articles

- TechRadar – VR Headsets and Mixed Reality Guides

- Ars Technica – Gaming and XR Features

- Wired – Virtual and Mixed Reality Reporting

- Meta / Oculus – Developer Resources

- Unity – XR Development Solutions

- Unreal Engine – Extended Reality (XR) Overview

- W3C – WebXR Device API Specification

- W3C – Web Content Accessibility Guidelines (WCAG) 2.2

As spatial computing evolves, staying informed via a mix of technical documentation, critical journalism, and practitioner case studies will help you separate durable progress from temporary hype — and choose where XR genuinely belongs in your own workflows.