Open-Source vs Closed-Source AI: Inside the Next Great Platform War

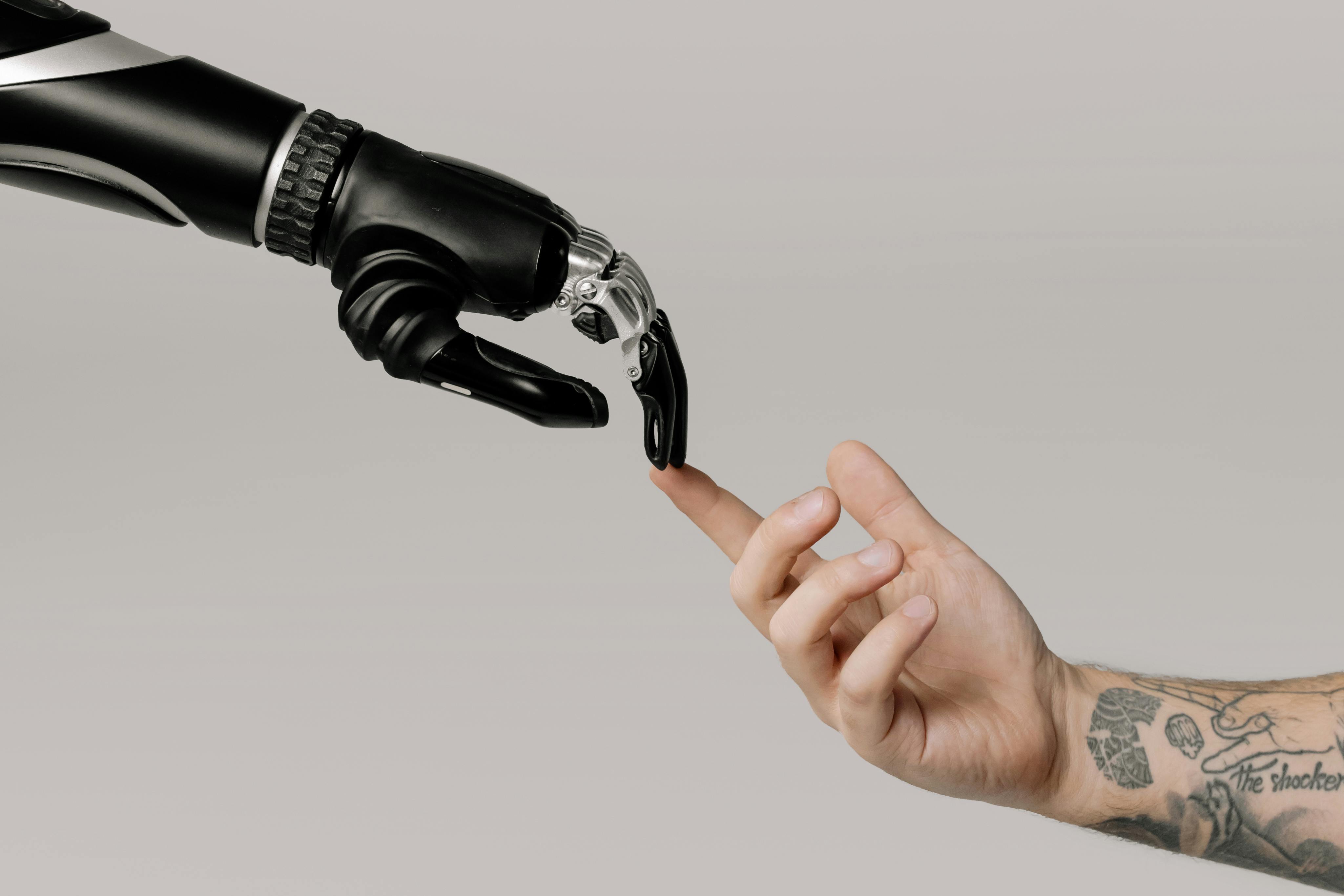

The rivalry between open-source and proprietary AI has become the defining “platform war” of the 2020s, echoing earlier battles between Windows and Linux, iOS and Android, and Chrome and Internet Explorer. But this time the stakes are higher: AI models are not just apps or operating systems—they are decision engines embedded in finance, healthcare, national security, education, and the creative industries. Understanding how this split emerged, and where it is heading, is now a strategic necessity for developers, enterprises, and policymakers.

At the center of the debate is a trade-off triangle: capability, control, and safety. Closed-source AI systems promise cutting‑edge performance and integrated cloud features, but require deep trust in vendors. Open-source models maximize transparency, portability, and experimentation, but raise difficult questions about misuse, responsibility, and long‑term sustainability.

This article breaks down the open vs. closed AI contest in six dimensions—technical architecture, economics, regulation, security, innovation, and long‑term governance—and offers practical guidance on how to navigate an increasingly polarized landscape.

Mission Overview: What Is the “Next Platform War” in AI?

In platform terms, AI models are becoming the new “runtime” for software. Instead of writing fixed logic, developers increasingly orchestrate prompts, tools, and fine-tuned models. The question is: whose platform is it?

Two Competing Models of Control

- Closed-source AI platforms are dominated by large technology vendors and well-funded startups. These providers offer:

  - Frontier‑scale models with state-of-the-art benchmark results

  - Integrated cloud APIs, storage, vector search, and orchestration tools

  - Enterprise features like SLAs, SOC 2/ISO 27001 compliance, and legal indemnities

- Open-source AI ecosystems are driven by communities, labs, and smaller companies. They emphasize:

  - Downloadable or self-hostable models under open or source‑available licenses

  - Hardware flexibility, from gaming GPUs to on-device mobile and edge

  - Modifiability: custom fine‑tuning, adapter layers, and domain-specific variants

“The key question is no longer whether open models can catch up, but how society will manage the consequences when they do.” — Anonymous AI researcher quoted in recent ML safety workshops

By late 2025, both camps are viable for serious work. The real competition is not about “which is better” in the abstract but “which is better for which workload, which risk tolerance, and which business model?”

Technology Landscape: How Open and Closed AI Differ Under the Hood

Model Scale, Architecture, and Capabilities

Closed‑source providers still dominate the extreme frontier layer: very large models (reportedly at trillion‑parameter scale) or highly optimized architectures trained on petabyte‑scale datasets. These models often lead on:

- Multimodal reasoning (text, images, code, sometimes audio and video)

- Very long context windows (hundreds of thousands of tokens or more)

- Specialized tools: code assistants, research copilots, CAD/design helpers

Open-source models have rapidly closed the gap at the small and medium scale:

- Compact models that run on a single consumer GPU or even high‑end laptops

- On‑device variants optimized for smartphones and local PCs

- Task‑specific fine‑tunes that match or beat larger closed models on narrow benchmarks
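To make the "single consumer GPU" claim concrete, here is a rough back-of-the-envelope sketch of how much memory model weights need at different precisions. The 7-billion-parameter size is a hypothetical example, and the estimate covers weights only; activations, the KV cache, and runtime overhead add more.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Hypothetical 7-billion-parameter model at common precisions.
for bits in (16, 8, 4):
    print(f"{bits}-bit weights: {weight_memory_gb(7e9, bits):.1f} GB")
```

Under these assumptions, the same model shrinks from about 14 GB at 16-bit precision to about 3.5 GB at 4-bit precision, which is why quantized variants fit on laptops and consumer GPUs.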

Inference, Latency, and Deployment Patterns

In closed systems, inference usually happens in vendor clouds with highly optimized runtimes (e.g., kernel fusion, tensor parallelism, specialized accelerators). Open models, by contrast, are typically:

- Self‑hosted in private data centers or VPCs

- Run at the edge (factories, vehicles, robotics)

- Deployed on user devices for privacy‑sensitive or offline scenarios

Hybrid architectures are becoming common: a lightweight open model handles routine queries locally, while calls requiring deep reasoning or multimodal capabilities are routed to a closed, frontier API.
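A minimal sketch of that hybrid pattern might look like the following. Everything here is illustrative: `HybridRouter`, the stub backends, and the word-count threshold are hypothetical stand-ins, and a real system would likely use a better signal than prompt length to decide what counts as "routine".

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class HybridRouter:
    """Routes prompts to a local open model or a remote frontier API."""
    local_model: Callable[[str], str]
    frontier_api: Callable[[str], str]
    max_local_words: int = 200  # assumed threshold, tune per workload

    def needs_frontier(self, prompt: str, has_image: bool = False) -> bool:
        # Crude heuristic: multimodal or very long prompts go to the frontier model.
        return has_image or len(prompt.split()) > self.max_local_words

    def complete(self, prompt: str, has_image: bool = False) -> str:
        if self.needs_frontier(prompt, has_image):
            return self.frontier_api(prompt)
        return self.local_model(prompt)


# Stub backends standing in for a self-hosted model and a vendor API.
def local_stub(prompt: str) -> str:
    return f"[local] {prompt[:30]}"


def frontier_stub(prompt: str) -> str:
    return f"[frontier] {prompt[:30]}"


router = HybridRouter(local_stub, frontier_stub)
print(router.complete("Summarize this support ticket."))
print(router.complete("Describe the attached chart.", has_image=True))
```

Keeping the routing decision in one method makes it easy to swap the heuristic later, for example for a small classifier or a confidence score from the local model.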

Security and Safety: Transparency vs. Containment

Arguments for Open Security

Open-source proponents argue that security through transparency is more robust over the long term. With open weights and code:

- Independent researchers can audit architectures, weights, and safety layers, as well as training data where it is published.

- External red‑teams can probe for prompt injection, jailbreaks, and data leakage.

- Organizations can apply their own content filters, guardrails, and monitoring systems.

“Open source is the driving force of innovation in AI.” — Yann LeCun, Meta Chief AI Scientist

Concerns About Open Misuse

However, regulators and safety researchers worry that fully open, high‑capability models could:

- Lower the barrier for large‑scale disinformation and deepfake campaigns

- Enable automated cyber‑offense, including code exploitation tooling

- Accelerate dangerous bioscience or chemical misuse when paired with other data

As a result, we see emerging middle grounds:

- Source‑available licenses with usage restrictions on certain domains

- Tiered access (research vs. commercial vs. high‑risk use cases)

- “Responsible release” frameworks where capability and risk assessments shape how and when weights are published

Closed Models: Safety by Policy and Perimeter

Closed-source vendors often emphasize:

- Centralized monitoring and abuse detection across their user base

- Liability frameworks and compliance certifications for enterprise customers

- Ability to withdraw or patch capabilities quickly when new risks appear

The trade‑off is that external experts must largely trust vendor disclosures about safety, as the underlying weights and full datasets remain opaque.

Regulation and Compliance: Who Is Responsible?

As AI regulations mature—especially in the EU, UK, and parts of Asia—the notion of a “model lifecycle” is crystallizing. Regulators distinguish between:

- Foundation model providers — those who train and release base models

- Fine‑tuners and adapters — those who customize models for specific tasks

- Deployers/operators — those who integrate models into products used by end‑users

Open Source: The Attribution Puzzle

For open models, the compliance chain is complex:

- Who is liable if a community fine‑tune introduces harmful behavior?

- How do you track provenance across forks and merged checkpoints?

- Can a small open‑source group realistically comply with extensive regulatory reporting?

Proposals include:

- Model cards and system cards that travel with checkpoints and document intended uses, training data, and known limitations

- Standardized capability and risk labels for open models

- Clearer assignment of duties to deployers as the last stage before user contact
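As one way to picture a model card that "travels with" a checkpoint, the sketch below attaches a content hash of the weights and a pointer to the parent checkpoint's hash, so provenance can be followed across forks. The `ModelCard` fields, model names, and placeholder byte strings are assumptions for illustration, not a standard schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class ModelCard:
    name: str
    intended_uses: list[str]
    training_data: str
    known_limitations: list[str]
    weights_sha256: str                  # fingerprint of this checkpoint
    parent_sha256: Optional[str] = None  # None for a base model


def sha256_of(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


# Placeholder bytes standing in for real checkpoint files.
base_weights = b"base checkpoint bytes"
tuned_weights = b"fine-tuned checkpoint bytes"

base = ModelCard(
    name="example-base",
    intended_uses=["general text generation"],
    training_data="public web text (hypothetical)",
    known_limitations=["may produce inaccurate statements"],
    weights_sha256=sha256_of(base_weights),
)
tuned = ModelCard(
    name="example-base-support-ft",
    intended_uses=["customer support drafting"],
    training_data="base model plus internal tickets (hypothetical)",
    known_limitations=["not evaluated for medical or legal advice"],
    weights_sha256=sha256_of(tuned_weights),
    parent_sha256=base.weights_sha256,  # links the fork back to its parent
)

print(json.dumps(asdict(tuned), indent=2))
```

Following `parent_sha256` links from any derivative back to a base model gives a simple lineage chain, though it only works if every fork in the chain records it honestly.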

Closed Models: Vendor as Regulated Entity

Closed-source providers are increasingly treated as regulated entities themselves, especially when they offer general‑purpose models. They must:

- Document training processes and safety evaluations to regulators

- Offer contractual commitments around data handling and model behavior

- Provide auditability mechanisms for large enterprise and government clients

For enterprises looking to minimize compliance overhead, delegating these responsibilities to a major vendor is often attractive—at the cost of deeper dependence on that vendor.

Economics and Lock‑In: Cost, Control, and the New Cloud Trap

Total Cost of Ownership (TCO) Comparisons

At first glance, open models appear “free” and proprietary APIs “expensive.” In reality, the calculus is subtler:

- Open-source costs:

  - GPU or accelerator infrastructure (capex or cloud)

  - Ops and MLOps teams for deployment, scaling, and monitoring

  - Ongoing fine‑tuning, evaluation, and security maintenance

- Closed-source costs:

  - Usage‑based pricing per token or per call

  - Premium tiers for enterprise-grade features

  - Potential “tax” on all higher‑level services (agents, vector stores, etc.) bundled into the same ecosystem

For early‑stage startups, proprietary APIs can be cheaper in the short term—no infra or ops team required. For large, steady‑state workloads, especially with privacy concerns, self‑hosting open models can become more economical over time.
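The crossover point can be estimated with simple arithmetic. The sketch below assumes a fixed monthly self-hosting cost (infrastructure plus staff) and a per-token price for each option; all figures are hypothetical and should be replaced with your own quotes.

```python
from typing import Optional


def breakeven_tokens_per_month(
    fixed_monthly_cost: float,
    api_price_per_million: float,
    selfhost_marginal_per_million: float = 0.0,
) -> Optional[float]:
    """Monthly token volume at which self-hosting and API costs are equal.

    Returns None if self-hosting never becomes cheaper per token.
    """
    saving_per_million = api_price_per_million - selfhost_marginal_per_million
    if saving_per_million <= 0:
        return None
    return fixed_monthly_cost / saving_per_million * 1_000_000


# Hypothetical inputs: $12,000/month fixed, $4 vs. $1 per million tokens.
tokens = breakeven_tokens_per_month(12_000, 4.0, 1.0)
print(f"Break-even at {tokens / 1e9:.1f} billion tokens per month")
```

With these example numbers the break-even is 4 billion tokens per month; below that volume the API is cheaper, above it self-hosting wins, all else being equal.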

Lock‑In Risks and Negotiation Leverage

Enterprises have learned from the first wave of cloud adoption. Many now:

- Adopt a multi‑vendor AI strategy with at least one open model provider in the mix

- Standardize around model‑agnostic orchestration layers (e.g., LangChain, LlamaIndex, custom abstractions)

- Insist on data portability and export guarantees at the contract level

“If AI becomes the ultimate lock‑in layer, customers will push back by demanding open interfaces and interoperable models.” — Paraphrased from industry analyst commentary on enterprise AI strategies

Tools for Cost-Aware Builders

Developers and small teams increasingly combine open and closed tools. For example:

- Use a GPU workstation or small on-prem cluster to experiment locally with open models; a consumer card such as the NVIDIA GeForce RTX 4090 (24 GB of VRAM) is enough to run quantized small and mid‑sized models on a single node.

- Route production traffic through a gateway that can swap between open and proprietary backends based on latency, cost, and capability.
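The gateway idea can be sketched as a selection function: filter backends by required capability and latency budget, then pick the cheapest. The `Backend` fields, names, and numbers below are hypothetical; a production gateway would also need health checks, retries, and live metrics.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Backend:
    name: str
    cost_per_million_tokens: float
    p95_latency_ms: float
    capabilities: frozenset


def pick_backend(
    backends: list[Backend], required: set, max_latency_ms: float
) -> Optional[Backend]:
    """Cheapest backend that has every required capability within the latency budget."""
    eligible = [
        b for b in backends
        if required <= b.capabilities and b.p95_latency_ms <= max_latency_ms
    ]
    return min(eligible, key=lambda b: b.cost_per_million_tokens, default=None)


# Illustrative backends with made-up costs and latencies.
backends = [
    Backend("open-small-selfhosted", 0.5, 300, frozenset({"text"})),
    Backend("proprietary-frontier", 10.0, 1200, frozenset({"text", "vision"})),
]

print(pick_backend(backends, {"text"}, 2000).name)
print(pick_backend(backends, {"vision"}, 2000).name)
print(pick_backend(backends, {"vision"}, 500))
```

Returning `None` when nothing qualifies forces the caller to decide explicitly whether to relax the latency budget or fail the request.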

Innovation Pace: Can Open Models Keep Up?

Replication and Leapfrogging

A consistent pattern since 2023 has been:

- A closed provider releases a breakthrough capability (e.g., longer context, a new multimodal feature, or powerful code completion).

- Within months, open communities replicate or approximate that capability with smaller, more efficient architectures.

- Tooling around open models—quantization, distillation, adapters—makes those capabilities widely accessible on commodity hardware.

This dynamic is particularly strong in:

- Vision‑language models (VLMs) for image description, OCR, and document understanding

- Code models used in IDEs and CI pipelines

- Small language models (SLMs) tuned for chat, retrieval‑augmented generation (RAG), and agents

Frontier vs. Ecosystem Innovation

Training frontier‑scale models still requires:

- Massive capital and long GPU reservations

- Highly specialized engineering for training pipelines

- Extremely large and sometimes proprietary datasets

That gives large firms an edge at the very top of the capability curve. But much of the day‑to‑day innovation that matters to developers—tools, frameworks, benchmarks, integrations—occurs in the open.

Academic and Civic Research

Open models are crucial for independent research in:

- Algorithmic fairness

- Robustness and adversarial testing

- Interpretability and mechanistic understanding

Without open weights, many of these investigations would be impossible or limited to synthetic experiments.

Milestones: Key Moments in the Open vs. Closed AI Contest

While the specifics continue to evolve, several milestone patterns are clear by late 2025:

1. The Rise of Community‑Driven LLMs

Successive generations of open models demonstrated that:

- Community fine‑tuning and instruction training can dramatically boost capability.

- Efficient architectures and quantization enable “good enough” performance on consumer devices.

- Open checkpoints can spawn entire ecosystems of specialized derivatives within weeks of release.

2. Enterprise‑Grade Open Offerings

Open‑source‑friendly companies began offering:

- Managed hosting for popular open models

- SLAs, logging, observability, and compliance tooling

- Consulting and support contracts—mirroring the Linux business model

3. Regulatory Recognition of Foundation Models

Legislative efforts in the EU and elsewhere formally introduced “foundation model” categories (the EU AI Act's final text refers to them as “general‑purpose AI models”), along with obligations for systemic risk assessment, transparency, and incident reporting, affecting both open and closed providers.

Challenges: What Could Go Wrong for Each Camp?

Challenges for Open-Source AI

- Sustainability: Maintaining cutting‑edge open models is expensive; funding and governance models must mature.

- Fragmentation: Many incompatible forks, licenses, and APIs can confuse users and slow adoption.

- Misuse and perception: High‑profile abuse cases could trigger heavy‑handed regulation targeting open ecosystems.

Challenges for Closed-Source AI

- Trust deficit: Opaque training data, safety claims, and bias mitigation can erode public trust.

- Regulatory and antitrust scrutiny: Concentrated control by a few firms invites legal and political pressure.

- Customer backlash: Enterprises may resist new forms of lock‑in, favoring open alternatives where feasible.

“The future of AI will likely be neither fully open nor fully closed, but a layered ecosystem where different degrees of openness coexist.” — Paraphrased from multiple policy and technical experts in AI governance forums

Practical Guidance: Choosing Between Open and Closed for Your Use Case

Key Questions to Ask

- What are my capability requirements? Do I truly need frontier‑level performance, or will a mid‑tier open model suffice?

- What are my privacy and data residency constraints? Can data leave my infrastructure?

- What is my forecasted volume? At what scale does self‑hosting become cheaper than API calls?

- What compliance regime applies? Healthcare, finance, and government may push toward self‑hosting or vendors with strong certifications.

- What is my team’s expertise? Do I have the in‑house skills to operate models reliably?

Common Patterns in 2025

- Hybrid stack: Open models for noncritical or internal workflows; closed frontier models for customer‑facing, high‑stakes features.

- RAG‑first design: Combine a moderately capable model (open or closed) with strong retrieval over your own data to reduce dependence on ultra‑large models.

- Abstraction layers: Design APIs so you can swap model backends with minimal code changes.
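To illustrate the RAG‑first pattern, here is a toy retrieval step that ranks documents by bag-of-words cosine similarity and builds a grounded prompt. This is a deliberately simplified sketch: real systems typically use learned embeddings and a vector index, and the documents and function names here are made up for the example.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "Refunds are processed within 14 days of the return request.",
    "The office is closed on public holidays.",
    "Enterprise plans include a 99.9% uptime SLA.",
]
print(build_prompt("How long do refunds take?", docs, k=1))
```

The resulting prompt can be sent to any backend, open or closed, which is what lets a moderately capable model answer questions about private data it was never trained on.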

For developers and architects looking to deepen their skills, Chip Huyen's book “Designing Machine Learning Systems” offers a solid foundation in production ML engineering that applies to both open and closed ecosystems.

Conclusion: Toward a Layered, Pluralistic AI Ecosystem

The “open vs. closed” framing often oversimplifies a nuanced reality. By late 2025, the AI stack is better described as layered:

- Open models providing a shared, inspectable foundation

- Closed models pushing the frontier and packaging capabilities for enterprises

- Interoperability layers that let applications mix and match across both worlds

For developers, the key is to avoid premature lock‑in, stay conversant with both ecosystems, and design for swap‑ability. For enterprises, the challenge is crafting governance frameworks that balance innovation, risk, and cost without ceding total control to any single vendor. For policymakers, the task is to support healthy competition and open research while mitigating real‑world harms.

As AI becomes the default interface to computing, the outcome of this platform war will shape not only who profits, but also who gets to define the rules of digital society. A pluralistic, interoperable ecosystem—where both open and closed models coexist under clear guardrails—remains the most resilient path forward.

Additional Resources and Further Reading

Technical and Policy References

- arXiv.org — Preprints on large language models, open-source releases, and AI safety

- Hugging Face Papers — Curated list of recent ML and LLM research

- Meta AI Blog — Discussions on open-source AI strategy and model releases

- OpenAI Research — Papers and reports on frontier models and alignment

- European Commission: AI Policy and Regulation

Staying current in this fast‑moving field requires continuous learning. Bookmark key research portals, follow reputable labs and experts, and periodically reassess whether your current mix of open and closed tools still matches your risk profile, regulatory obligations, and strategic goals.