Whisper It: Microsoft's Revelation of New AI Privacy Flaws

Microsoft's Whisper Leak: A New Frontier in AI Vulnerabilities

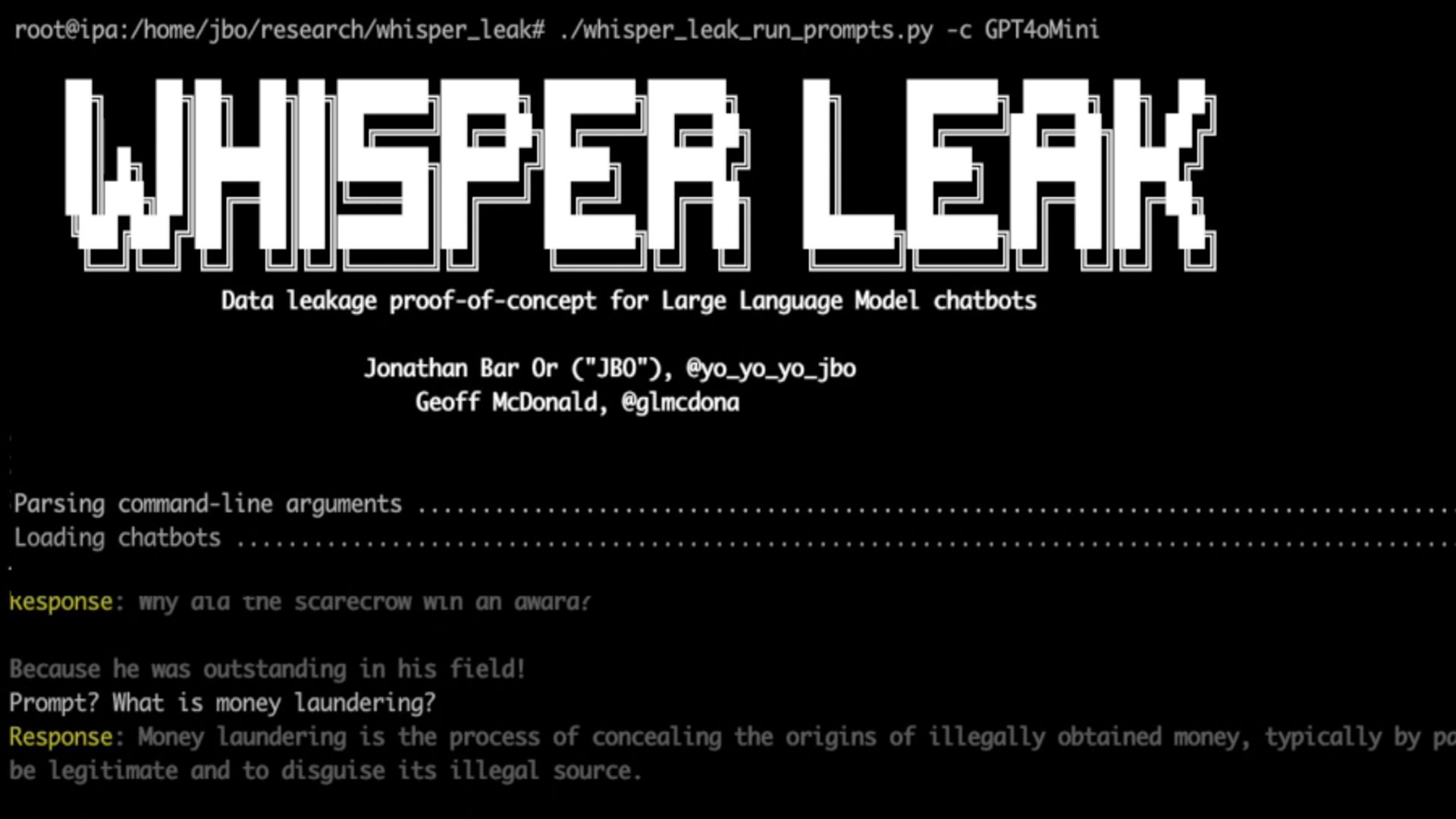

Within the intricate web of today's digital communications, Microsoft's disclosure of Whisper Leak has jolted the tech world. Encryption remains the gold standard for safeguarding information, yet this new attack shows how a determined adversary can extract telling clues from encrypted traffic without ever breaking the encryption itself. The discovery raises critical questions about the privacy of encrypted communications, particularly for AI applications built on large language models.

Behind the Discovery: What is Whisper Leak?

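Whisper Leak is a side-channel attack, and it changes how we should think about the privacy of conversations with AI systems. Instead of decrypting traffic, an attacker who can watch the network, such as someone on the same Wi-Fi network or at an internet service provider, studies metadata that encryption does not hide: the sizes and timing of the encrypted packets that carry a response. Because many AI chat services stream their answers token by token, these patterns track the content closely enough for an attacker to infer whether a conversation concerns a particular sensitive topic, without decrypting a single byte.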
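To make the idea concrete, here is a minimal Python sketch of why streaming can leak information even over an encrypted connection. It assumes, for illustration only, that each token travels in its own TLS record and that every record adds the same fixed 22-byte overhead. Real services wrap tokens in extra framing (for example JSON events), and all function names here are hypothetical. The published Whisper Leak research trained machine-learning classifiers on sequences of packet sizes and timings. The `length_profile` helper only hints at the kind of features such a classifier might consume.

```python
"""Toy illustration of a packet-size side channel on streamed LLM responses.

Illustrative assumptions only (not Microsoft's actual methodology):
  * each streamed token travels in its own encrypted TLS record;
  * every record adds the same fixed overhead, so ciphertext size
    equals plaintext size plus RECORD_OVERHEAD.
"""

# Hypothetical fixed overhead per record: TLS 1.3 with AES-GCM adds a 5-byte
# record header, a 1-byte inner content type, and a 16-byte authentication tag.
RECORD_OVERHEAD = 5 + 1 + 16


def observed_sizes(tokens: list[str]) -> list[int]:
    """Ciphertext sizes a network observer would see, one per streamed token."""
    return [len(token.encode("utf-8")) + RECORD_OVERHEAD for token in tokens]


def recover_token_lengths(sizes: list[int]) -> list[int]:
    """The observer cannot decrypt, but can subtract the known overhead."""
    return [size - RECORD_OVERHEAD for size in sizes]


def length_profile(lengths: list[int]) -> tuple[int, float]:
    """Simple features a classifier could use: token count and mean length."""
    if not lengths:
        return 0, 0.0
    return len(lengths), sum(lengths) / len(lengths)


if __name__ == "__main__":
    reply = ["Hello", ",", " world", "!"]
    sizes = observed_sizes(reply)
    print(sizes)                                          # [27, 23, 28, 23]
    print(recover_token_lengths(sizes))                   # [5, 1, 6, 1]
    print(length_profile(recover_token_lengths(sizes)))   # (4, 3.25)
```

Under these assumptions, the observer recovers the exact byte length of every token from the ciphertext alone. Sequences of such lengths, combined with timing, are the raw material an attacker's classifier works from.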

"Technology, when used without moral guidelines, can lead to catastrophic consequences." - Bill Gates

- Impact on user privacy and data integrity

- Potential methods attackers use to exploit Whisper Leak

- The role of AI firms in mitigating these risks

The Whisper Leak issue is a testament to the constantly evolving nature of cybersecurity threats. While the tech industry races to deploy effective countermeasures, users are urged to remain vigilant about their online interactions, even when they believe systems are secure.

AI Firms React: Emergency Measures and Future Security

AI firms are not taking these revelations lightly. Following Microsoft's disclosure, several providers have reportedly deployed mitigations that make encrypted response traffic less revealing, for example by adding random-length filler to streamed chunks so their sizes no longer mirror the underlying tokens. These measures are driven by the urgent need to preserve user trust and keep AI-based services safe to use.
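A common countermeasure against size-based side channels is to pad each chunk so its length no longer reflects its content. The Python sketch below shows one hypothetical scheme and is not any provider's actual implementation. Each chunk gets a 2-byte length prefix and is zero-padded up to a multiple of a 32-byte bucket, optionally followed by a random number of extra buckets. The bucket size and function names are illustrative assumptions.

```python
import secrets
import struct

# Hypothetical bucket size in bytes; larger buckets hide more but cost bandwidth.
BUCKET_SIZE = 32


def pad_chunk(chunk: bytes, bucket: int = BUCKET_SIZE, max_extra_buckets: int = 0) -> bytes:
    """Length-prefix `chunk`, then zero-pad it to a multiple of `bucket` bytes.

    If max_extra_buckets > 0, a random number (0..max_extra_buckets) of extra
    whole buckets is appended, so equal-length chunks also vary in size.
    """
    if len(chunk) > 0xFFFF:
        raise ValueError("chunk too large for a 2-byte length prefix")
    framed = struct.pack(">H", len(chunk)) + chunk
    padded_len = -(-len(framed) // bucket) * bucket  # round up to a bucket multiple
    padded_len += secrets.randbelow(max_extra_buckets + 1) * bucket
    return framed + b"\x00" * (padded_len - len(framed))


def unpad_chunk(padded: bytes) -> bytes:
    """Strip the padding using the 2-byte big-endian length prefix."""
    (length,) = struct.unpack(">H", padded[:2])
    return padded[2 : 2 + length]


if __name__ == "__main__":
    tokens = [b"Hello", b",", b" world", b"!"]
    print([len(pad_chunk(t)) for t in tokens])                     # [32, 32, 32, 32]
    print(unpad_chunk(pad_chunk(b" world", max_extra_buckets=3)))  # b' world'
```

With the default settings, the four tokens of a short reply all become 32 bytes before encryption, so their lengths can no longer be read off the ciphertext. Padding alone does not hide timing or the number of chunks, which is why sending several tokens per chunk (batching) is often discussed alongside it.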

Preserving Trust in AI Systems: What Users Can Do

While companies work to close these gaps, users also have a role to play. Practical steps include staying informed about security updates, avoiding highly sensitive topics with AI chatbots while connected to untrusted networks such as public Wi-Fi, using a VPN for an additional layer of protection, and preferring services that have deployed mitigations against Whisper Leak.

For more technical deep dives, interested readers can start with Microsoft's own published research on Whisper Leak, as well as white papers on AI security practices available through platforms such as ResearchGate and coverage on sites like Cybersecurity News.

As the dialogue around digital privacy and cybersecurity continues, society must acknowledge the double-edged nature of technology, where advancements bring both innovation and new risks. With tech giants such as Microsoft leading the charge in understanding and mitigating these risks, the prospect of a more secure AI-driven future remains promising.