The Foundations of AGI: Unveiling the Pillars of Future Intelligence

Transformers: The Heart of AGI

Bob McGrew, a former head of research at OpenAI, helped lead the transition from the GPT-3 era to today's reasoning models. At the core of this evolution is the Transformer, the neural network architecture that still underpins the most capable language models and sets the pace for AGI research.
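To make the idea concrete, here is a minimal sketch of scaled dot-product attention, the operation at the center of the Transformer. It is a toy in plain Python, not how any production model is implemented: real Transformers add learned projections for queries, keys, and values, multiple attention heads, and many stacked layers. The function names and the three example token vectors are assumptions made up for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dimension).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        mixed = [
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs

# Hypothetical toy input: three token vectors attending to each other.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = attention(tokens, tokens, tokens)
print(result)
```

Each row of the result is a blend of all three token vectors, weighted by how closely that token matches the others. This is the mechanism that lets a model relate every word in a sequence to every other word.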

The Innovation Behind Scaled Pre-Training

Scaled pre-training forms the backbone of these systems' learning capabilities. By training ever-larger models on vast amounts of data, it lets them pick up the statistical patterns that underlie language and knowledge. This method has been central to OpenAI's approach, pushing the boundaries of what's possible in machine learning.
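As a rough illustration, language-model pre-training is commonly framed as next-token prediction: the model assigns a probability to each possible next token and is penalized when the true token gets a low probability. The sketch below computes that penalty (average cross-entropy) for hand-written probabilities. The three-token vocabulary, the probability values, and the function name are assumptions for illustration, not anything taken from OpenAI's training setup.

```python
import math

def next_token_loss(predicted_probs, target_ids):
    """Average negative log-likelihood of the true next tokens."""
    losses = [-math.log(probs[t]) for probs, t in zip(predicted_probs, target_ids)]
    return sum(losses) / len(losses)

# Hypothetical 3-token vocabulary and two prediction steps.
targets = [0, 1]  # index of the token that actually came next at each step
confident = [[0.9, 0.05, 0.05], [0.1, 0.8, 0.1]]  # mostly right
uncertain = [[1 / 3, 1 / 3, 1 / 3], [1 / 3, 1 / 3, 1 / 3]]  # pure guessing

print(next_token_loss(confident, targets))
print(next_token_loss(uncertain, targets))
```

The confident predictions score a lower loss than the uniform guesses. Pre-training adjusts the model's parameters to push this number down across an enormous corpus, and scaling means doing so with more data, more parameters, and more compute.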

“The goal of artificial intelligence is to create systems that understand complex patterns and perform tasks without human intervention.” - Bob McGrew

The Importance of Reasoning Models

Reasoning models are the logical component of AGI, enabling machines to work through problems step by step before committing to a decision. These models are refined during post-training to improve the accuracy and reliability of their decisions.

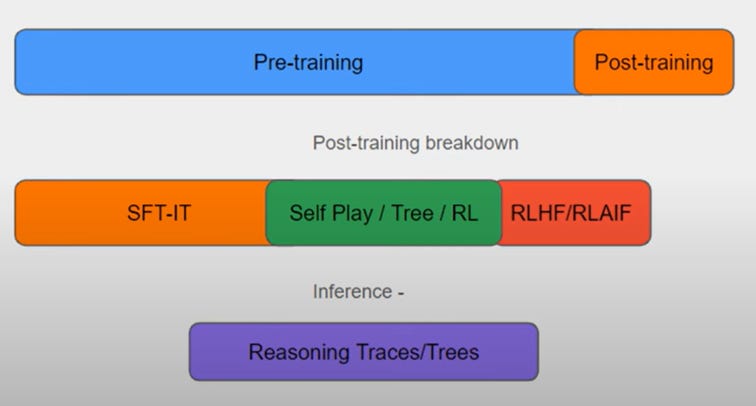

Post-Training: Refining Intelligence

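Post-training takes a pre-trained model and refines it further, using techniques such as supervised fine-tuning and reinforcement learning from human feedback. It acts as a kind of "mental exercise" that helps the model follow instructions and adapt to new tasks and environments. This stage is crucial for ensuring AI systems remain capable and relevant as data landscapes change.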

The Future of AGI: What's Next?

As AGI technology progresses, OpenAI continues to pave the way, not only by improving existing models but also by exploring new capabilities. With these foundational concepts firmly in place, the future possibilities for AGI development are vast and exciting.

- Explore Artificial Intelligence: A Modern Approach by Stuart Russell and Peter Norvig (available on Amazon) for an in-depth understanding.

- Read more about OpenAI's initiatives on LinkedIn.

As we continue to explore and innovate, the world eagerly anticipates the dawn of a new intelligence era, where machines and humans collaborate to solve complex challenges and build a better future.